Knowledge Engineering¶

Symbols¶


[…]

URIs are symbols:

Linguistics https://en.wikipedia.org/wiki/Linguistics

Character encoding¶

Control Characters¶

Warning

Control characters are often significant.

Common security errors involving control characters:

https://cwe.mitre.org/data/definitions/74.html

CWE-74: Improper Neutralization of Special Elements in Output Used by a Downstream Component (‘Injection’)

https://cwe.mitre.org/data/definitions/93.html

CWE-93: Improper Neutralization of CRLF Sequences (‘CRLF Injection’)

x = "line1_start"
x2 = "thing\r\n\0line1_end"
x = x + x2
x = x + "line2...line2_end\n"
records = x.splitlines()  # ! error: the \r\n injected via x2 splits the first record

https://cwe.mitre.org/data/definitions/140.html

CWE-140: Improper Neutralization of Delimiters

https://cwe.mitre.org/data/definitions/141.html

CWE-141: Improper Neutralization of Parameter/Argument Delimiters

https://cwe.mitre.org/data/definitions/142.html

CWE-142: Improper Neutralization of Value Delimiters

https://cwe.mitre.org/data/definitions/143.html

CWE-143: Improper Neutralization of Record Delimiters

https://cwe.mitre.org/data/definitions/144.html

CWE-144: Improper Neutralization of Line Delimiters

https://cwe.mitre.org/data/definitions/145.html

CWE-145: Improper Neutralization of Section Delimiters
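The splitlines() example above breaks because a field can contain the same characters that delimit records. The sketch below neutralizes record and line delimiters (CWE-93, CWE-143, CWE-144) before untrusted fields are joined. It assumes a hypothetical backslash-escaping convention. `neutralize_field` is an illustrative name, not a standard API, and a reader of the records would need the matching unescape step.

```python
import unicodedata


def neutralize_field(value: str) -> str:
    r"""Escape backslashes and every character str.splitlines() may split on.

    Characters in Unicode categories Cc (controls, including \r, \n, \0,
    \x0b, \x0c, \x1c-\x1e and \x85), Zl (\u2028) and Zp (\u2029) become
    \uXXXX escapes, so an escaped field never contains a line break.
    """
    out = []
    for ch in value:
        if ch == "\\":
            out.append("\\\\")  # escape the escape character itself first
        elif unicodedata.category(ch) in ("Cc", "Zl", "Zp"):
            out.append("\\u{:04x}".format(ord(ch)))
        else:
            out.append(ch)
    return "".join(out)


fields = ["line1_start" + "thing\r\n\0line1_end", "line2...line2_end"]
records = [neutralize_field(f) for f in fields]
text = "\n".join(records) + "\n"
assert text.splitlines() == records  # one record per field, as intended
```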

Escape Sequences¶

https://en.wikipedia.org/wiki/Escape_sequences_in_C#Table_of_escape_sequences

& < > /> " <!-- --> <![CDATA[ ]]>

# HTML & Templates
<p id="{{attr}}">text</p>
# attr='here"s one'  # ! error if unescaped: the " ends the id attribute early

Python escape sequences:

s = "Here's one"
s = 'Here\'s one'
s = '''Here's one'''
s = 'Here\N{APOSTROPHE}s one'
s = 'Here'"'s"' one'  # adjacent string literals are concatenated

Bash escape sequences:

s1="$Here's one"
s1="${Here}'s one"
s2='${Here}\'s one'  # ! error: backslash does not escape inside single quotes
s2='${Here}'"'s"' one'
s3=""$Here"'s one"
s3=""${Here}"'s one"
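Python's standard library already has escapers for both of the contexts above, so hand-escaping is rarely needed. html.escape() handles HTML attribute values and shlex.quote() handles POSIX shell words. Here is a short sketch. Note that shlex.quote() only targets POSIX sh-compatible shells.

```python
import html
import shlex

attr = 'here"s one'
# quote=True (the default) also escapes " and ', which matters inside attributes
markup = '<p id="{}">text</p>'.format(html.escape(attr, quote=True))
print(markup)  # <p id="here&quot;s one">text</p>

# shlex.quote() closes the single-quoted string, adds a quoted ', then reopens it
word = shlex.quote("Here's one")
print(word)    # 'Here'"'"'s one'
```

This is the same trick as the `s2='${Here}'"'s"' one'` line above: a POSIX shell concatenates adjacent quoted strings.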

ASCII¶

ASCII (American Standard Code for Information Interchange) defines 128 characters.

Python:

from __future__ import print_function

for i in range(0, 128):
    print("{0:<3d} {1!r} {1:s}.".format(i, chr(i)))

Unicode¶

https://en.wikipedia.org/wiki/Unicode_symbols#Symbol_block_list

Entering Unicode Symbols:

∴ – Therefore – U+2234

X11: ctrl-shift-u 2234

Vim: ctrl-v u2234

Python 3 Unicode HOWTO: https://docs.python.org/3/howto/unicode.html

Python 2 Unicode HOWTO: https://docs.python.org/2/howto/unicode.html

c1 = u'∴'  # Python 2.x, 3.3+ (PEP 414)
c2 = '∴'  # Python 3.0+
c3 = '\N{THEREFORE}'  # Python 3.0+; named escape, see howto/unicode#the-string-type
# u1 = unichr(0x2234)  # Python 2 only (NameError in Python 3)
u2 = chr(0x2234)  # Python 3.0+
from builtins import chr  # Python 3; Python 2 needs the `future` package
u3 = chr(0x2234)  # Python 2 & 3
u4 = chr(8756)  # int(hex(8756)[2:], 16) == 8756 (0x2234)
chars = [c1, c2, c3, u2, u3, u4]
from operator import eq
assert all((eq(x, chars[0]) for x in chars))

Python and UTF-8:

Python 2 Codecs docs: https://docs.python.org/2/library/codecs.html

# Read an assumed UTF-8 encoded JSON file with Python 2+, 3+
import codecs
with codecs.open('filename.json', encoding='utf8') as file_:
    text = file_.read()

Unicode encodings:

UTF-1

UTF-5

UTF-6

UTF-9, UTF-18

UTF-16

UTF-32

UTF-8¶

UTF-8 is a Unicode Character encoding which can represent all Unicode symbols with 8-bit code units.
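To make "8-bit code units" concrete, here is a minimal sketch of the UTF-8 rules for a single code point, checked against Python 3's str.encode('utf-8'). A code point takes 1 to 4 bytes, and the leading bits of the first byte give the length. The sketch skips the check that rejects surrogate code points (U+D800 to U+DFFF), so it is illustrative only.

```python
def utf8_encode(code_point: int) -> bytes:
    """Encode one Unicode code point as UTF-8 (sketch; no surrogate check)."""
    if code_point < 0x80:                      # 0xxxxxxx
        return bytes([code_point])
    if code_point < 0x800:                     # 110xxxxx 10xxxxxx
        return bytes([0xC0 | (code_point >> 6),
                      0x80 | (code_point & 0x3F)])
    if code_point < 0x10000:                   # 1110xxxx 10xxxxxx 10xxxxxx
        return bytes([0xE0 | (code_point >> 12),
                      0x80 | ((code_point >> 6) & 0x3F),
                      0x80 | (code_point & 0x3F)])
    if code_point < 0x110000:                  # 11110xxx + three continuation bytes
        return bytes([0xF0 | (code_point >> 18),
                      0x80 | ((code_point >> 12) & 0x3F),
                      0x80 | ((code_point >> 6) & 0x3F),
                      0x80 | (code_point & 0x3F)])
    raise ValueError("not a Unicode code point: %r" % code_point)


for ch in "Aé∴😀":  # 1, 2, 3 and 4 bytes respectively
    assert utf8_encode(ord(ch)) == ch.encode("utf-8")

print(utf8_encode(0x2234))  # b'\xe2\x88\xb4' (∴)
```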

As of 2015, UTF-8 was the most common web character encoding.

Why use UTF-8? https://www.w3.org/International/questions/qa-choosing-encodings#useunicode

Logic, Reasoning, and Inference¶

https://en.wikipedia.org/wiki/Epistemology

Logic¶

See:

Set Theory¶

Boolean Algebra¶

Many-valued Logic¶

Three-valued Logic¶

{ True, False, Unknown }

{ T, F, NULL } # SQL

{ T, F, None } # Python

{ T, F, nil } # Ruby

{ 1, 0, -1 } #
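Python's own `and`/`or` operators do not follow SQL's three-valued rules: `None and False` evaluates to `None`. Below is a minimal sketch of Kleene's strong three-valued logic, the rules SQL applies to NULL, assuming None stands for Unknown. The names and3/or3/not3 are illustrative.

```python
from typing import Optional

Tri = Optional[bool]  # True, False, or None standing for Unknown / SQL NULL


def and3(a: Tri, b: Tri) -> Tri:
    if a is False or b is False:
        return False          # False dominates AND, even alongside Unknown
    if a is None or b is None:
        return None
    return True


def or3(a: Tri, b: Tri) -> Tri:
    if a is True or b is True:
        return True           # True dominates OR, even alongside Unknown
    if a is None or b is None:
        return None
    return False


def not3(a: Tri) -> Tri:
    return None if a is None else not a


print(None and False)     # None  (Python short-circuits on the falsy None)
print(and3(None, False))  # False (SQL: NULL AND FALSE is FALSE)
```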

Fuzzy Logic¶

Probabilistic Logic¶

Propositional Calculus¶

Premise: P

Conclusion: Q

Modus ponens¶

P -> Q – Premise 1 ($P_1$, “P sub 1”)

P – Premise 2 ($P_2$, “P sub 2”)

∴ Q – Conclusion ($Q_0$, “Q sub 0”)
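Applying modus ponens repeatedly until nothing new follows is the core of forward chaining. Here is a minimal sketch, assuming propositions are plain strings and each rule `(P, Q)` encodes `P -> Q`. `forward_chain` is an illustrative name, not a library API.

```python
def forward_chain(facts, rules):
    """From P and P -> Q, infer Q; repeat until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            if premise in known and conclusion not in known:
                known.add(conclusion)   # one application of modus ponens
                changed = True
    return known


rules = [("P", "Q"), ("Q", "R")]        # P -> Q, Q -> R
print(sorted(forward_chain({"P"}, rules)))  # ['P', 'Q', 'R']
```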

Predicate Logic¶

Universe of discourse

Predicate

∃ – There exists – Existential quantifier

∀ – For all – Universal quantifier

Existential quantification¶

∃ – “There exists” is the Existential quantifier symbol.

An existential quantifier is true (“holds true”) if there is at least one example in which the condition holds true.

An existential quantifier is satisfied by one (or more) examples.

Universal quantification¶

∀ – “For all” is the Universal quantifier symbol.

A universal quantification is disproven by one counterexample where the condition does not hold true.

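Over a finite universe of discourse, the two quantifiers correspond to Python's any() and all(). The universe 1..10 and the predicate below are assumptions chosen for illustration. Note the vacuous cases: all() over an empty universe is True, and any() over one is False.

```python
universe = range(1, 11)  # a finite universe of discourse: 1..10 (assumed)


def is_even(n):
    return n % 2 == 0


exists_even = any(is_even(n) for n in universe)  # ∃x even(x): one example suffices
forall_even = all(is_even(n) for n in universe)  # ∀x even(x): one counterexample disproves it
counterexample = next((n for n in universe if not is_even(n)), None)

print(exists_even, forall_even, counterexample)  # True False 1
```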

Hoare Logic¶

precondition: P

command: C

postcondition: Q

See:

Propositional Calculus, Predicate Logic
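A Hoare triple, written {P} C {Q}, is proved rather than tested. Its three parts still map naturally onto runtime assertions. Below is a sketch for the hypothetical triple {x >= 0} y := x + 1 {y > 0}. Running the assertions on sample inputs can only find counterexamples; it cannot prove the triple. The assertions are also stripped when Python runs with -O.

```python
def increment(x: int) -> int:
    assert x >= 0, "precondition P violated"   # {P}: x >= 0
    y = x + 1                                  # C:   y := x + 1
    assert y > 0, "postcondition Q violated"   # {Q}: y > 0
    return y


# Exercise the triple on sample inputs that satisfy P
for x in range(100):
    increment(x)
```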

First-order Logic¶

First-order logic (FOL)

Terms

Variables

x, y, z, $x_0$ (“x subscript 0”, “x sub 0”)

Functions

f(x) – function symbol (arity 1)

a – constant symbol (arity 0) (a())

Formulas (“formulae”)

Equality

= – equality

Logical Connectives (“unary”, “binary”, sequence/tuple/list)

¬ – ~, ! – negation (unary)

…

∧ – ^, &&, and – conjunction

∨ – v, ||, or – disjunction

→ – ->, ⊃ – implication

↔ – <->, ≡ – biconditional

…

XOR, NAND

Grouping Operators

Parentheses: ( )

Brackets: < >

Relations

P(x) – predicate symbol (n_args=1, arity 1, valence 1)

R(x) – relation symbol (n_args=1, arity 1, valence 1)

Q(x,y) – binary predicate/relation symbol (n_args=2, …)

Quantifier Symbols “universe relation”

Description Logic¶

Description Logic (DL; DLP (Description Logic Programming))

Knowledge Base = TBox + ABox

https://en.wikipedia.org/wiki/TBox (Schema: Class/Property Ontology)

https://en.wikipedia.org/wiki/ABox (Facts / Instances)

See:

N3 for => (implies)

Reasoning¶

https://en.wikipedia.org/wiki/Deductive_reasoning

https://en.wikipedia.org/wiki/Category:Reasoning

https://en.wikipedia.org/wiki/Semantic_reasoner

See: Description Logic

Inference¶

https://en.wikipedia.org/wiki/Category:Statistical_inference (Logic + Math)

Entailment¶

See: Data Science

Data Engineering¶

Data Engineering is about the 5 Ws (who, what, when, where, why) and how data are stored.

File Structures¶

https://en.wikipedia.org/wiki/File_format

https://en.wikipedia.org/wiki/Record_(computer_science)

https://en.wikipedia.org/wiki/Field_(computer_science)

https://en.wikipedia.org/wiki/Index#Computer_science

tar and zip are file structures that have a manifest and a payload

Filesystems often have redundant manifests (and/or deduplication according to a hash table manifest with an interface like a DHT)

Web Standards and Semantic Web Standards which define file structures (and stream protocols):

Git File Structures¶

Git specifies a number of file structures: Git Objects, Git References, and Git Packfiles.

Git implements something like on-disk shared snapshot objects with commits, branching, merging, and multi-protocol push/pull semantics: https://en.wikipedia.org/wiki/Shared_snapshot_objects

Git Object¶

Git Reference¶

Git Packfile¶

“Git is a content-addressable filesystem”
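Content-addressable means an object's name is a hash of its content. For a blob, Git hashes a short header (`blob <size>\0`) followed by the raw bytes. With Git's default SHA-1 object format, this sketch should agree with `git hash-object`. Repositories that use the newer SHA-256 object format produce different IDs.

```python
import hashlib


def git_blob_id(data: bytes) -> str:
    """Object ID Git assigns to a blob with this content (SHA-1 object format)."""
    header = b"blob %d\x00" % len(data)
    return hashlib.sha1(header + data).hexdigest()


print(git_blob_id(b"test content\n"))  # d670460b4b4aece5915caf5c68d12f560a9fe3e4
```

Identical content always gets the same ID, which is why Git stores duplicate files only once.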

bup¶

Bup (backup) is a backup system based on git packfiles and rolling checksums.

[bup is a very] efficient backup system based on the Git Packfile format, providing fast incremental saves and global deduplication (among and within files, including virtual machine images).

Torrent file structure¶

A BitTorrent .torrent file is a bencoded manifest of tracker, DHT, and web seed URIs, and of segment (piece) checksum hashes.

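Like MPEG-DASH and HTTP Live Streaming, BitTorrent downloads a file in segments (pieces). Unlike them, it fetches pieces from peers over its own peer wire protocol, and uses HTTP only for trackers and web seeds.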
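A torrent manifest is bencoded:

- integers as `i<n>e`
- byte strings as `<length>:<bytes>`
- lists as `l…e`
- dictionaries as `d…e`, with keys in sorted order

Below is a minimal encoder sketch; decoding is omitted. For v1 torrents, the infohash in a magnet URI's `urn:btih` is the SHA-1 of the bencoded `info` dictionary. The `info` values below are made up for illustration.

```python
import hashlib


def bencode(value) -> bytes:
    """Bencode ints, str/bytes, lists and dicts (a sketch; no decoder)."""
    if isinstance(value, int):
        return b"i%de" % value
    if isinstance(value, str):
        value = value.encode("utf-8")
    if isinstance(value, bytes):
        return b"%d:%s" % (len(value), value)
    if isinstance(value, list):
        return b"l" + b"".join(bencode(item) for item in value) + b"e"
    if isinstance(value, dict):
        # Keys are byte strings and must appear sorted by their raw bytes
        items = sorted(((k.encode("utf-8") if isinstance(k, str) else k), v)
                       for k, v in value.items())
        return b"d" + b"".join(bencode(k) + bencode(v) for k, v in items) + b"e"
    raise TypeError("cannot bencode %r" % type(value))


print(bencode({"spam": ["a", 42]}))  # b'd4:spaml1:ai42eee'

# Hypothetical single-file info dictionary
info = {"name": "a.txt", "length": 5, "piece length": 16384, "pieces": b"\x00" * 20}
infohash = hashlib.sha1(bencode(info)).hexdigest()  # 40 hex digits
```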

File Locking¶

File locking is one strategy for synchronization with concurrency and parallelism.

An auxiliary <filename>.lockfile is still susceptible to race conditions unless it is created atomically (e.g. with O_CREAT | O_EXCL).

C file locking functions: fcntl, lockf, flock

Python file locking functions: fcntl.fcntl, fcntl.lockf, fcntl.flock: https://docs.python.org/2/library/fcntl.html

To lock a file for all processes with Linux requires the mandatory file locking mount option (mount -o mand) and, per file, the setgid bit set with the group-execute bit cleared (chmod g+s,g-x). Linux 5.15 and later no longer support mandatory locking.

To lock a file (or a range / record of a file) for all processes with Windows requires no additional work beyond win32con.LOCKFILE_EXCLUSIVE_LOCK, win32file.LockFileEx, and win32file.UnlockFileEx.

CWE-667: Improper Locking: https://cwe.mitre.org/data/definitions/667.html#Relationships
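Below is a sketch of both approaches in Python on Unix-like systems; the fcntl module is not available on Windows. The first creates a lockfile atomically with O_CREAT | O_EXCL. The second takes an advisory flock(), which only coordinates processes that also take the lock. The function names are illustrative.

```python
import fcntl
import os
from contextlib import contextmanager


def try_create_lockfile(path: str) -> bool:
    """Atomically create <filename>.lockfile; False if it already exists."""
    try:
        fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    os.write(fd, str(os.getpid()).encode("ascii"))  # record the owner, for debugging
    os.close(fd)
    return True


@contextmanager
def exclusive_flock(path: str):
    """Hold an advisory exclusive flock() on path for the duration of the block."""
    with open(path, "a") as f:
        fcntl.flock(f.fileno(), fcntl.LOCK_EX)  # blocks until the lock is free
        try:
            yield f
        finally:
            fcntl.flock(f.fileno(), fcntl.LOCK_UN)
```

A lockfile must be removed (os.remove) when the work is done, and one left behind by a crashed process stays "locked". A flock() is released automatically when its file is closed.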

Data Structures¶

Arrays¶

An array is a data structure for unidimensional data.

Arrays must be resized when data grows beyond the initial shape of the array.

Sparse arrays store only their non-default (e.g. nonzero) elements, so most of their index space is never allocated.

A two-dimensional array is called a matrix.

Matrices¶

A matrix is a data structure for two-dimensional (row and column) data; a two-dimensional array.

Lists¶

A (linked) list is a data structure with nodes that link to a next and/or previous node.

Graphs¶

A graph is a system of nodes connected by edges; an abstract data type for which there are a number of suitable data structures.

A node has edges.

An edge connects nodes.

Edges of directed graphs point in only one direction, so a two-way relationship requires two edges, each with its own attributes (e.g. ‘magnitude’, ‘scale’).

Wikipedia: https://en.wikipedia.org/wiki/Directed_graph

Edges of an undirected graph connect nodes in both directions (with the same attributes).

Graphs and trees are traversed (or walked) according to a given algorithm (e.g. DFS, BFS).

Graph nodes can be listed in many different orders (or with a given ordering):

Preorder

Inorder

Postorder

Level-order

There are many data structure representations for Graphs.

There are many data serialization/marshalling formats for graphs:

Graph edge lists can be stored as adjacency matrices.
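Below is a sketch that converts a directed edge list into an adjacency matrix, using plain lists of lists; NumPy arrays or SciPy sparse matrices are common in practice. A dense matrix uses O(n²) memory however few edges there are, so sparse graphs are usually kept as edge or adjacency lists. For an undirected graph, set both `matrix[i][j]` and `matrix[j][i]`, which makes the matrix symmetric.

```python
def adjacency_matrix(edges):
    """Return (sorted node labels, matrix) where matrix[i][j] == 1 iff edge i -> j."""
    nodes = sorted({n for edge in edges for n in edge})
    index = {n: i for i, n in enumerate(nodes)}
    matrix = [[0] * len(nodes) for _ in nodes]
    for u, v in edges:
        matrix[index[u]][index[v]] = 1
    return nodes, matrix


nodes, m = adjacency_matrix([("a", "b"), ("b", "c"), ("a", "c")])
# nodes == ['a', 'b', 'c']
# m == [[0, 1, 1],
#       [0, 0, 1],
#       [0, 0, 0]]
```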

NetworkX supports a number of graph storage formats.

RDF is a standard semantic web Linked Data format for Graphs.

JSON-LD is a standard semantic web Linked Data format for Graphs.

There are many Graph Databases and RDF Triplestores for storing graphs.

A cartesian product has an interesting graph representation. (See Compression Algorithms)

NetworkX¶

NetworkX is an Open Source graph algorithms library written in Python.

DFS¶

DFS (Depth-first search) is a graph traversal algorithm.

# Given a tree:
1
    1.1
    1.2
2
    2.1
    2.2

# DFS:
[1, 1.1, 1.2, 2, 2.1, 2.2]

See also: Bulk Synchronous Parallel, Firefly Algorithm
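Below is an iterative DFS (preorder) sketch over the example tree. It assumes the tree is stored as a dict of child lists, with a hypothetical "root" node above 1 and 2. The `seen` set makes the same function safe on graphs with cycles.

```python
def dfs_preorder(graph, start):
    """Depth-first traversal with an explicit stack; returns nodes in visit order."""
    order, seen, stack = [], set(), [start]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        # Push children in reverse so the leftmost child is visited first
        stack.extend(reversed(graph.get(node, [])))
    return order


tree = {"root": ["1", "2"], "1": ["1.1", "1.2"], "2": ["2.1", "2.2"]}
print(dfs_preorder(tree, "root")[1:])  # ['1', '1.1', '1.2', '2', '2.1', '2.2']
```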

BFS¶

BFS (Breadth-first search) is a graph traversal algorithm.

# Given a tree:
1
    1.1
    1.2
2
    2.1
    2.2

# BFS:
[1, 2, 1.1, 1.2, 2.1, 2.2]

[ ] BFS and Bulk Synchronous Parallel
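Below is a BFS sketch over the example tree. It assumes the tree is stored as a dict of child lists, with a hypothetical "root" node above 1 and 2. A FIFO queue (collections.deque) replaces DFS's stack, which is the whole difference in visit order.

```python
from collections import deque


def bfs(graph, start):
    """Breadth-first traversal with a FIFO queue; returns nodes in visit order."""
    order, seen, queue = [], {start}, deque([start])
    while queue:
        node = queue.popleft()
        order.append(node)
        for child in graph.get(node, []):
            if child not in seen:   # guard against revisiting in graphs with cycles
                seen.add(child)
                queue.append(child)
    return order


tree = {"root": ["1", "2"], "1": ["1.1", "1.2"], "2": ["2.1", "2.2"]}
print(bfs(tree, "root")[1:])  # ['1', '2', '1.1', '1.2', '2.1', '2.2']
```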

Topological Sorting¶

A DAG (directed acyclic graph) always has at least one topological sorting: an ordering of its nodes in which every edge points from an earlier node to a later one.

The Unix tsort utility does a topological sorting of a whitespace-delimited list of node label pairs, where each pair is one edge:

$ tsort --help
Usage: tsort [OPTION] [FILE]
Write totally ordered list consistent with the partial ordering in FILE.

With no FILE, or when FILE is -, read standard input.

--help display this help and exit
--version output version information and exit

GNU coreutils online help: <http://www.gnu.org/software/coreutils/>
For complete documentation, run: info coreutils 'tsort invocation'

$ echo -e '1 2\n2 3\n3 4\n2 a' | tsort
1
2
a
3
4

Installing a set of packages with dependencies is a topological sorting problem, plus e.g. version and platform constraints (which can be solved with a SAT constraint satisfaction solver; see Conda and pypi:pycosat).

A topological sorting can identify the “roots” (nodes with no incoming edges) of a directed acyclic graph.
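Below is a sketch of Kahn's algorithm on the same edges as the tsort example. The nodes it starts from, those with no incoming edges, are the DAG's roots. It prints a different but equally valid order than GNU tsort, because a topological sorting is generally not unique. Python 3.9+ also ships graphlib.TopologicalSorter for this.

```python
from collections import deque


def kahn_toposort(edges):
    """Kahn's algorithm over (u, v) edges meaning u -> v; raises on cycles."""
    succ, indegree = {}, {}
    for u, v in edges:
        succ.setdefault(u, []).append(v)
        succ.setdefault(v, [])
        indegree.setdefault(u, 0)
        indegree[v] = indegree.get(v, 0) + 1
    queue = deque(n for n, d in indegree.items() if d == 0)  # the roots
    order = []
    while queue:
        node = queue.popleft()
        order.append(node)
        for nxt in succ[node]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                queue.append(nxt)
    if len(order) != len(indegree):
        raise ValueError("graph has a cycle; no topological sorting exists")
    return order


edges = [("1", "2"), ("2", "3"), ("3", "4"), ("2", "a")]
print(kahn_toposort(edges))  # ['1', '2', '3', 'a', '4']
```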

Information gain can be useful for less discrete problems.

Trees¶

A (rooted) tree is a directed acyclic graph in which every node except the root has exactly one parent.

A tree is said to have branches and leaves; or just nodes.

There are many types of and applications for trees:

Search: Indexing, Lookup

Compression Algorithms¶

bzip2¶

bzip2 is an Open Source lossless compression algorithm based upon the Burrows-Wheeler transform.

gzip¶

gzip (.gz) is a compression algorithm based on DEFLATE (LZ77 plus Huffman coding).

gzip is similar to zip, in that both are based upon DEFLATE.

tar¶

tar (.tar) is a file archiving format which concatenates a set of files into one file, storing a header record (path and attributes) before each file's contents.

TAR and gzip or bzip2 can be streamed over SSH:

# https://unix.stackexchange.com/a/95994

tar czf - . | ssh remote "( cd ~/ ; cat > file.tar.gz )"

tar cjf - . | ssh remote "( cd ~/ ; cat > file.tar.bz2 )"
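The same kind of archive can be built without the tar CLI using Python's tarfile module. This sketch writes a gzip-compressed tar into memory. Each member gets its own header (path, size, mode, …) followed by its data. Mode "w|gz" would write a non-seekable stream instead, e.g. to a pipe like the ssh examples above.

```python
import io
import tarfile


def make_tar_gz(files):
    """Build a .tar.gz archive in memory from a {path: bytes} mapping."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        for path, data in files.items():
            info = tarfile.TarInfo(name=path)  # per-member header record
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


archive = make_tar_gz({"a.txt": b"hello\n", "dir/b.txt": b"world\n"})
with tarfile.open(fileobj=io.BytesIO(archive), mode="r:gz") as tar:
    names = tar.getnames()
    first = tar.extractfile("a.txt").read()
print(names)  # ['a.txt', 'dir/b.txt']
```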

zip¶

zip is a lossless compressed file archive format.

Hash Functions¶

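Hash functions map data of arbitrary size to fixed-size values. Checksums and cryptographic hash functions produce practically (though not perfectly) unique identifiers for blocks or whole files in order to verify data Integrity; cryptographic hash functions are additionally designed to be one-way.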

A hash is the output of a hash function.

In Python, dict keys must be hashable (they must implement __hash__, consistently with __eq__).

In Java, Scala, and many other languages, dicts are called HashMaps.

MD5 is a (cryptographic) hash algorithm.

SHA (Secure Hash Algorithm) is a family of cryptographic hash algorithms.

CRC¶

A CRC (Cyclic Redundancy Check) is an error-detecting code: a short check value, computed by polynomial division of the data, that is stored or sent along with the data.

Hard Drives and SSDs implement CRCs.

Ethernet implements CRCs.
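Below is a bit-at-a-time sketch of CRC-32, the variant used by Ethernet, gzip, zip, and PNG. It uses the reflected polynomial 0xEDB88320, with 0xFFFFFFFF as both the initial value and the final XOR. The result is checked against zlib.crc32(). Production implementations use lookup tables or CPU instructions instead of this inner loop.

```python
import zlib


def crc32(data: bytes) -> int:
    """Bitwise CRC-32 (IEEE 802.3), reflected form."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xEDB88320
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF


assert crc32(b"123456789") == zlib.crc32(b"123456789") == 0xCBF43926
```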

MD5¶

MD5 is a 128-bit hash function which is now broken, and deprecated in favor of SHA-2 or better.

md5 (BSD, OS X)

md5sum (GNU coreutils)

SHA¶

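SHA-0 – 160 bit (published 1993; withdrawn shortly after)

SHA-1 – 160 bit (deprecated 2010)

SHA-2 – SHA-224, SHA-256, SHA-384, SHA-512, SHA-512/224, SHA-512/256

SHA-3 (Keccak; selected 2012, standardized 2015)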

shasum

shasum -a 1

shasum -a 224

shasum -a 256

shasum -a 384

shasum -a 512

shasum -a 512224

shasum -a 512256
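The md5 and shasum commands above have counterparts in Python's hashlib. This sketch hashes a file in chunks so that large files need not fit in memory. `digest_file` is an illustrative name; Python 3.11+ also provides hashlib.file_digest().

```python
import hashlib


def digest_file(path, algorithm="sha256", chunk_size=1 << 16):
    """Hex digest of a file, like `shasum -a 256 path` or `md5sum path`."""
    h = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()
```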

Filesystems¶

Filesystems (file systems) determine how files are represented in a persistent physical medium.

On-disk filesystems determine where and how redundantly data is stored

Network Filesystems link disk storage pools with other resources (e.g. NFS, Ceph, GlusterFS)

RAID¶

RAID (redundant array of independent disks) is a set of configurations for Hard Drives and SSDs to stripe and/or mirror data, with or without parity.

RAID 0 -- striping, -, no parity ... throughput

RAID 1 -- no striping, mirroring, no parity ...

RAID 2 -- bit striping, -, no parity ... legacy

RAID 3 -- byte striping, -, dedicated parity ... uncommon

RAID 4 -- block striping, -, dedicated parity

RAID 5 -- block striping, -, distributed parity ... min. 3; n-1 rebuild

RAID 6 -- block striping, -, 2x distributed parity
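RAID 4 and RAID 5 parity rests on XOR. The parity block is the XOR of the data blocks in a stripe, so any single missing block equals the XOR of all the others. This sketch covers one stripe of three hypothetical 2-byte blocks. RAID 6's second parity uses Reed-Solomon style Galois-field coding, which is not shown.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


d0, d1, d2 = b"\x01\x02", b"\x10\x20", b"\xff\x00"   # one stripe of data blocks
parity = xor_blocks(d0, d1, d2)                     # written to the parity disk

# The disk holding d1 fails: rebuild it from the survivors plus parity
rebuilt_d1 = xor_blocks(d0, d2, parity)
assert rebuilt_d1 == d1
```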

RAID Implementations:

RAID may be implemented by a physical controller with multiple drive connectors.

RAID may be implemented as a BIOS setting.

https://en.wikipedia.org/wiki/RAID#Firmware-_and_driver-based (“fake RAID”)

- Data Scrubbing

Data scrubbing is a technique for checking for inconsistencies between redundant copies of data

Data scrubbing is routinely part of RAID (with mirrors and/or parity bits).

MBR¶

MBR (Master Boot Record) is a boot record format and a disk partitioning scheme.

DOS and Windows use MBR partition tables.

Many/most UNIX variants support MBR partition tables.

Linux supports MBR partition tables.

Most BIOS-based PCs since 1983 boot from MBR partition tables.

When a BIOS-based PC boots, it loads and runs the boot code in the MBR (the first sector) of the first configured drive, which then locates and loads the bootloader.
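A classic MBR is one 512-byte sector laid out as follows:

- 446 bytes of boot code
- a partition table of four 16-byte entries, starting at offset 446
- the boot signature 0x55 0xAA at offset 510

This sketch parses the partition table. It skips the legacy CHS fields and reads the little-endian LBA fields. Reading a real disk's first sector (e.g. from /dev/sda) typically requires root, so the usage below builds a synthetic sector instead.

```python
import struct


def parse_mbr_partitions(sector: bytes):
    """Return the non-empty primary partition entries of an MBR sector."""
    if len(sector) < 512 or sector[510:512] != b"\x55\xaa":
        raise ValueError("missing MBR boot signature 0x55AA")
    partitions = []
    for i in range(4):
        status, _chs_first, ptype, _chs_last, lba_first, num_sectors = \
            struct.unpack_from("<B3sB3sII", sector, 446 + 16 * i)
        if ptype != 0:  # type 0x00 marks an unused entry
            partitions.append({"bootable": status == 0x80, "type": ptype,
                               "lba_first": lba_first, "sectors": num_sectors})
    return partitions


# Synthetic sector: one bootable Linux (type 0x83) partition at LBA 2048
sector = bytearray(512)
struct.pack_into("<B3sB3sII", sector, 446, 0x80, b"\x00" * 3, 0x83, b"\x00" * 3, 2048, 204800)
sector[510:512] = b"\x55\xaa"
print(parse_mbr_partitions(bytes(sector)))
```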

GPT¶

GPT (GUID Partition Table) is a disk partitioning scheme (part of the UEFI specification) wherein partitions are assigned GUIDs (Globally Unique Identifiers).

OS X uses GPT partition tables.

Linux supports GPT partition tables.

https://en.wikipedia.org/wiki/GUID_Partition_Table#UNIX_and_Unix-like_operating_systems

LVM¶

LVM (Logical Volume Manager) is an Open Source software disk abstraction layer with snapshotting, copy-on-write, online resize and allocation and a number of additional features.

In LVM, there are Volume Groups (VG), Physical Volumes (PV), and Logical Volumes (LV).

LVM can do striping and high-availability software RAID.

LVM is built on device-mapper, which is part of the Linux kernel tree (the device-mapper kernel modules are built and included with most distributions’ default kernel build).

LVM Logical Volumes can be resized online (without e.g. rebooting to busybox or a LiveCD); but many Filesystems support only online grow (and not online shrink).

There is feature overlap between LVM and btrfs (pooling, snapshotting, copy-on-write).

btrfs¶

btrfs (B-tree filesystem) is an Open Source pooling, snapshotting, checksumming, deduplicating, union mounting copy-on-write on-disk Linux filesystem.

ext¶

ext2, ext3, and ext4 are the ext (extended filesystem) Open Source on-disk filesystems.

FAT¶

FAT is a group of on-disk filesystem standards.

FAT is used on cross-platform USB drives.

FAT is found on older Windows and DOS machines.

FAT12, FAT16, and FAT32 are all FAT filesystem standards.

FAT32 has a maximum file size of 4 GiB minus 1 byte and, with 512-byte sectors, a maximum volume size of 2 TiB.

Windows machines can read and write FAT partitions.

OS X machines can read and write FAT partitions.

Linux machines can read and write FAT partitions.

ISO9660¶

ISO9660 (.iso) is an ISO standard filesystem for optical disc (CD-ROM) images; its El Torito extension specifies a standard for booting from a disc image.

Many Operating System distributions are distributed as ISO9660 .iso files.

ISO9660 and Linux:

An ISO9660 ISO can be loop mounted:

mount -o loop,ro -t iso9660 ./path/to/file.iso /mnt/cdrom

An ISO9660 CD can be mounted:

mount -o ro -t iso9660 /dev/cdrom /mnt/cdrom

Most CD/DVD burning utilities support ISO9660 .iso files.

ISO9660 is useful in that it specifies how to encode the boot sector (El Torito) and the disc's filesystem layout.

Nowadays, ISO9660 .iso files are often converted to raw drive images and written to bootable USB Mass Storage devices (e.g. to write an install / recovery disk for Debian, Ubuntu, Fedora, Windows).

HFS+¶

HFS+ (Hierarchical Filesystem) or Mac OS Extended, is the filesystem for Mac OS 8.1+ and OS X.

HFS+ is required for OS X and Time Machine.

https://www.cnet.com/how-to/the-best-ways-to-format-an-external-drive-for-windows-and-mac/

Windows machines can access HFS+ partitions with: HFSExplorer (free, Java), Paragon HFS+ for Windows, or MacDrive

https://www.makeuseof.com/tag/4-ways-read-mac-formatted-drive-windows/

Linux machines can access HFS+ partitions with hfsprogs (apt-get install hfsprogs, yum install hfsprogs).

NTFS¶

NTFS is a proprietary journaling filesystem.

NTFS was introduced with Windows NT 3.1; Windows machines since Windows XP default to NTFS filesystems.

Non-Windows machines can access NTFS partitions through NTFS-3G: https://en.wikipedia.org/wiki/NTFS-3G

FUSE¶

FUSE (Filesystem in Userspace) is a userspace filesystem API for implementing filesystems in userspace.

FUSE support has been included in the Linux kernel since 2.6.14.

FUSE is available for most POSIX platforms.

Interesting FUSE implementations:

PyFilesystem is a Python language api interface which supports FUSE: https://docs.pyfilesystem.org/en/latest/

Ceph can be mounted with/over/through FUSE.

GlusterFS can be mounted with/over/through FUSE.

NTFS-3G mounts volumes with FUSE.

virtualbox-fuse supports mounting of VirtualBox VDI images with FUSE.

SSHFS, GitFS, GmailFS, GdriveFS, WikipediaFS and Gnome GVFS are all FUSE filesystems.

SSHFS¶

SSHFS is a FUSE filesystem for mounting remote directories over SSH.

Network Filesystems¶

Ceph¶

Ceph is an Open Source network filesystem (a distributed database for files with attributes like owner, group, permissions) written in C++ and Perl which runs over top of one or more on-disk filesystems.

Ceph Block Device (rbd) – striping, caching, snapshots, copy-on-write, KVM, Libvirt, OpenStack Cinder block storage

Ceph Filesystem (cephfs) – POSIX filesystem with FUSE, NFS, CIFS, and HDFS APIs

Ceph Object Gateway (radosgw) – RESTful API, Amazon AWS S3 API, OpenStack Swift API, OpenStack Keystone authentication

CIFS¶

CIFS (Common Internet File System) is a centralized network filesystem protocol.

Samba smbd is one implementation of a CIFS network file server.

DDFS¶

DDFS (Disco Distributed File System) is a distributed network filesystem written in Python and C.

GlusterFS¶

GlusterFS is an Open Source network filesystem (a distributed database for files with attributes like owner, group, permissions) which runs over top of one or more on-disk filesystems.

GlusterFS can serve volumes for OpenStack Cinder block storage

HDFS¶

HDFS (Hadoop Distributed File System) is an Open Source distributed network filesystem.

Hadoop runs code next to data stored in HDFS (data locality), rather than streaming data through code across the network.

HDFS is especially suitable for MapReduce-style distributed computation.

Apache Hadoop works with files stored over HDFS, FTP, S3, WASB (Azure)

There are HDFS language apis for many languages: Java, Scala, Go, Python, Ruby, Perl, Haskell, C++

Mesos can manage distributed HDFS grids.

It’s possible to configure a Jenkins Continuous Integration cluster as a Hadoop cluster.

Many databases support storage over HDFS (HBase, Cassandra, Accumulo, Spark)

HDFS can be accessed from the commandline with the Hadoop FS shell: https://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-common/FileSystemShell.html

HDFS can be browsed with hdfs-du: https://github.com/twitter/hdfs-du

NFS¶

NFS (Network File System #TODO) is an Open Source centralized network filesystem.

S3¶

Amazon AWS S3

OpenStack Swift

Swift¶

SMB¶

SMB (Server Message Block) is a centralized network filesystem.

CIFS is a dialect of SMB (SMB 1); SMB 2 and SMB 3 have since superseded CIFS.

WebDAV¶

WebDAV (Web Distributed Authoring and Versioning) is a network filesystem protocol built with HTTP.

WebDAV specifies a number of unique HTTP methods:

PROPFIND (ls, stat, getfacl)

PROPPATCH (touch, setfacl)

MKCOL (mkdir)

COPY (cp)

MOVE (mv)

LOCK (File Locking)

UNLOCK ()
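WebDAV methods are ordinary HTTP requests with new verbs, so a generic HTTP client can send them. This sketch builds, but does not send, a PROPFIND request (the WebDAV counterpart of ls) with urllib.request. The server URL is hypothetical. `Depth: 1` asks for the collection and its immediate members.

```python
import urllib.request

body = (b'<?xml version="1.0" encoding="utf-8"?>'
        b'<d:propfind xmlns:d="DAV:"><d:allprop/></d:propfind>')
req = urllib.request.Request(
    "https://dav.example.com/files/",   # hypothetical WebDAV collection
    data=body,
    method="PROPFIND",
    headers={"Depth": "1", "Content-Type": "application/xml; charset=utf-8"},
)
# urllib.request.urlopen(req) would return a 207 Multi-Status XML response
```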

Databases¶

https://en.wikipedia.org/wiki/Create,_read,_update_and_delete

https://en.wikipedia.org/wiki/Category:Database_software_comparisons

Object Relational Mapping¶

https://en.wikipedia.org/wiki/Object-relational_impedance_mismatch

Relation Algebra¶

See: Relational Algebra

Relational Algebra¶

Relational Databases¶

https://en.wikipedia.org/wiki/Relational_model

https://en.wikipedia.org/wiki/Database_normalization

https://en.wikipedia.org/wiki/Relational_database_management_system

What doesn’t SQL do?

SQL¶

https://en.wikipedia.org/wiki/Null_(SQL)#Comparisons_with_NULL_and_the_three-valued_logic_.283VL.29

https://en.wikipedia.org/wiki/Hierarchical_and_recursive_queries_in_SQL

SQL security:

https://cwe.mitre.org/top25/#CWE-89 (#1 Most Prevalent Dangerous Security Error (2011))
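Here is the classic CWE-89 example, sketched with Python's built-in sqlite3 module and a hypothetical users table. Building SQL by string formatting lets the input rewrite the query. A parameterized query (the `?` placeholder) passes the value separately from the SQL text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 1), ("bob", 0)])

untrusted = "nobody' OR '1'='1"

# ! error (CWE-89): the input closes the string literal and adds OR '1'='1'
unsafe = conn.execute(
    "SELECT name FROM users WHERE name = '%s'" % untrusted).fetchall()

# Parameterized query: the value can never be parsed as SQL
safe = conn.execute(
    "SELECT name FROM users WHERE name = ?", (untrusted,)).fetchall()

print(len(unsafe), len(safe))  # 2 0
```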

MySQL¶

MySQL Community Edition is an Open Source relational database.

PostgreSQL¶

PostgreSQL is an Open Source relational database.

PostgreSQL has native support for storing and querying JSON.

PostgreSQL has support for geographical queries (PostGIS).

SQLite¶

SQLite (.sqlite) is a serverless Open Source relational database which stores a whole database in one file.

SQLite is included in the Python standard library (the sqlite3 module).

Virtuoso¶

Virtuoso Open Source edition is a multi-paradigm relational database / XML document database / RDF triplestore.

Relational Tables Data Management (Columnar or Column-Store SQL RDBMS)

Relational Property Graphs Data Management (SPARQL RDF based Quad Store)

Content Management (HTML, TEXT, Turtle, RDF/XML, JSON, JSON-LD, XML)

Web and other Document File Services (Web Document or File Server)

Five-Star Linked Open Data Deployment (RDF-based Linked Data Server)

Web Application Server (SOAP or RESTful interaction modes).

Virtuoso supports ODBC, JDBC, and DB-API relational database access.

Virtuoso powers DBpedia.

NoSQL Databases¶

https://en.wikipedia.org/wiki/Keyspace_(distributed_data_store)

Graph Databases¶

https://en.wikipedia.org/wiki/Graph_database#Graph_database_projects

Blazegraph [RDF, OWL]

Graph Queries

https://en.wikipedia.org/wiki/Graph_database#APIs_and_Graph_Query.2FProgramming_Languages

Spark GraphX

Blazegraph¶

Blazegraph is an Open Source graph database written in Java with support for Gremlin, Blueprints, RDF, RDFS and OWL inferencing, SPARQL.

Blazegraph was formerly known as Bigdata.

Blazegraph 1.5.2 supports Solr (e.g. TF-IDF) indexing.

Blazegraph was selected in 2015 to power the Wikidata Query Service (RDF, SPARQL):

https://lists.wikimedia.org/pipermail/wikidata-tech/2015-March/000740.html

MapGraph is a set of GPU-accelerations for graph processing.

Blueprints¶

Blueprints is an Open Source graph database API (and reference graph data model).

Blueprints is a collection of interfaces, implementations, ouplementations, and test suites for the property graph data model.

Blueprints is analogous to the JDBC, but for graph databases. As such, it provides a common set of interfaces to allow developers to plug-and-play their graph database backend.

Moreover, software written atop Blueprints works over all Blueprints-enabled graph databases.

Within the TinkerPop software stack, Blueprints serves as the foundational technology for:

Pipes: A lazy, data flow framework

Gremlin: A graph traversal language

Frames: An object-to-graph mapper

Furnace: A graph algorithms package

Rexster: A graph server

There are many blueprints API implementations (e.g. Rexster, Neo4j, Blazegraph, Accumulo)

Gremlin¶

Gremlin is an Open Source domain-specific language for traversing property graphs.

Gremlin works with databases that implement the Blueprints graph database API.

Neo4j¶

Neo4j is an Open Source HA graph database written in Java.

Neo4j implements the Paxos distributed algorithm for HA (high availability).

Neo4j can integrate with Spark and ElasticSearch.

Neo4j is widely deployed in production environments.

There is a Blueprints API implementation for Neo4j:

https://github.com/tinkerpop/blueprints/wiki/Neo4j-Implementation

RDF Triplestores¶

https://en.wikipedia.org/wiki/List_of_subject-predicate-object_databases

Graph Pattern Query Results

https://en.wikipedia.org/wiki/Redland_RDF_Application_Framework

SAIL (Storage and Inferencing Layer) API

rdfs:seeAlso

Distributed Databases¶

Accumulo¶

Apache Accumulo is an Open Source distributed database key/value store written in Java based on BigTable which adds realtime queries, streaming iterators, row-level ACLs and a number of additional features.

BigTable¶

Google BigTable is an open reference design for a distributed key/value column store and a proprietary production database system.

Apache Beam¶

Apache Beam is an open source batch and streaming parallel data processing framework with support for Apache Apex, Apache Flink, Apache Spark, and Google Cloud Dataflow.

Cassandra¶

Apache Cassandra is an Open Source distributed key/value super column store written in Java.

Cassandra is similar to Amazon AWS Dynamo and BigTable.

Cassandra supports MapReduce-style computation.

Cassandra supports HDFS.

Cassandra was originally developed at Facebook.

Hadoop¶

Apache Hadoop is a collection of Open Source distributed computing components; particularly for MapReduce-style computation over Hadoop HDFS distributed filesystem.

HBase¶

Apache HBase is an Open Source distributed key/value super column store based on BigTable written in Java that does MapReduce-style computation over Hadoop HDFS.

HBase has a Java API, a RESTful API, an Avro API, and a Thrift API.

Hive¶

Apache Hive is an Open Source data warehousing platform written in Java.

Parquet¶

Apache Parquet is an Open Source columnar storage format for Distributed Databases.

Apache Parquet is a columnar storage format available to any project in the Hadoop ecosystem, regardless of the choice of data processing framework, data model or programming language.

Presto¶

Presto is an Open Source distributed query engine designed to query multiple datastores at once.

Spark¶

Apache Spark is an Open Source distributed computation platform.

Spark keeps working data in memory; the Spark project reports up to 100x faster performance than Hadoop MapReduce for some in-memory workloads.

Spark can work with data in/over/through HDFS, Cassandra, OpenStack Swift, Amazon AWS S3, and the local filesystem.

Spark can be provisioned by YARN or Mesos.

Spark has Java, Scala, Python, and R language APIs.

Spark set a world sorting benchmark record in 2014: https://spark.apache.org/news/spark-wins-daytona-gray-sort-100tb-benchmark.html

GraphX¶

GraphX is an Open Source graph processing framework built on Spark.

Distributed Algorithms¶

Distributed Databases and distributed Information Systems implement Distributed Algorithms designed to solve for Confidentiality, Integrity, and Availability.

As separate records / statements to be yield-ed or emitted:

- Distributed Databases implement Distributed Algorithms.

- Distributed Information Systems implement Distributed Algorithms.

See Also:

Distributed Computing Problems¶

Non-blocking algorithm¶

DHT¶

A DHT (Distributed Hash Table) is a distributed key/value store which partitions keys (e.g. content checksum hashes) across many nodes, so that a value can be looked up by its exact key.

At an API level, a DHT is a key/value store.

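DNS is loosely similar to a DHT (a distributed key/value lookup), though DNS delegates names hierarchically rather than by hash.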

Distributed Databases all implement some form of a structure similar to a DHT (a replicated keystore); often for things like bloom filters (for fast search)

Browsers that maintain a local cache could implement a DHT (e.g. with WebSocket or WebRTC)

BitTorrent magnet URIs (URNs) contain a key, which is a checksum of a manifest, which can be retrieved from a DHT:

# <a href="magnet:?xt=urn:btih:IJBDPDSBT4QZLBIJ6NX7LITSZHZQ7F5I">.</a>
key_uri = "IJBDPDSBT4QZLBIJ6NX7LITSZHZQ7F5I"  # base32-encoded infohash (the DHT key)
dht = DHT()  # hypothetical DHT client class
value = dht.get(key_uri)

Named Data Networking is also essentially a cached DHT.
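At its core, a DHT decides which node stores each key. Below is a minimal consistent-hashing sketch of that decision, in the style of a Chord or Dynamo hash ring; BitTorrent's Mainline DHT uses Kademlia's XOR distance instead. Nodes and keys are hashed onto a ring, and a key belongs to the first node at or after its position. Removing a node therefore moves only that node's keys. `HashRing` is an illustrative name, and a real DHT adds routing, replication, and virtual nodes.

```python
import bisect
import hashlib


def ring_position(label: str) -> int:
    """Place a node name or key on a 160-bit ring using SHA-1."""
    return int.from_bytes(hashlib.sha1(label.encode("utf-8")).digest(), "big")


class HashRing:
    def __init__(self, nodes):
        self._ring = sorted((ring_position(n), n) for n in nodes)
        self._positions = [p for p, _ in self._ring]

    def node_for(self, key: str) -> str:
        """First node clockwise from the key's position (wrapping around)."""
        i = bisect.bisect_left(self._positions, ring_position(key))
        return self._ring[i % len(self._ring)][1]


ring = HashRing(["node-a", "node-b", "node-c"])
print(ring.node_for("IJBDPDSBT4QZLBIJ6NX7LITSZHZQ7F5I"))
```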

MapReduce¶

MapReduce is a distributed algorithm for distributed computation.
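The classic MapReduce example is word count. This is a single-process sketch of the three phases. In Hadoop or Spark the map and reduce calls run in parallel on many nodes, and the framework performs the shuffle across the network.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Map: emit a (word, 1) pair for every word."""
    return [(word, 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group all values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine the values for one key."""
    return key, sum(values)


documents = ["the cat sat", "the cat ran"]
pairs = chain.from_iterable(map(map_phase, documents))
counts = dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())
print(counts)  # {'the': 2, 'cat': 2, 'sat': 1, 'ran': 1}
```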

Paxos¶

Raft¶

https://en.wikipedia.org/wiki/Raft_(computer_science)#Basics

Leader / Candidate / Follower

Heartbeat (Leader -> Followers [-> Candidates])

etcd (CoreOS, Kubernetes, Configuration Management)

skydns

Bulk Synchronous Parallel¶

Bulk Synchronous Parallel (BSP) is a distributed algorithm for distributed computation.

Google Pregel, Apache Giraph, and Apache Spark are built for a Bulk Synchronous Parallel model

MapReduce can be expressed very concisely in terms of BSP.

Distributed Computing Protocols¶

https://en.wikipedia.org/wiki/Comparison_of_data_serialization_formats

Programming Languages’ implementations:

CORBA¶

CORBA (Common Object Request Broker Architecture) is a distributed computing protocol now defined by OMG with implementations in many languages.

CORBA is a distributed object-oriented protocol for platform-neutral distributed computing.

CORBA objects are marshalled and serialized according to an IDL (Interface Definition Language) with a limited set of datatypes (see also XSD, Distributed Computing Protocols: Protocol Buffers, Thrift, Avro, MessagePack, JSON-LD)

CORBA ORBs (Object Request Brokers) route requests for objects (see also ESB)

CORBA objects are either in local address space (see also file:///dev/mem) or remote address space (see also dereferenceable HTTP, HTTPS URLs).

CORBA objects can be looked up by reference (by URL, or NameService (see also DNS)).

“CORBA Objects are passed by reference, while data (integers, doubles, structs, enums, etc.) are passed by value” – https://en.wikipedia.org/wiki/Common_Object_Request_Broker_Architecture#Features

Message Passing¶

ESB¶

An ESB (Enterprise Service Bus) is a centralized distributed computing component which relays (or brokers) messages with or as a message queue (MQ).

ESB is generally the name for a message queue / task worker pattern in SOA (particularly in Java).

ESBs host service endpoints for message producers and consumers.

ESBs can also maintain state or logs.

ESB services can often be described with e.g. WSDL and/or JSON-WSP.

https://en.wikipedia.org/wiki/Category:Message-oriented_middleware

MPI¶

MPI (Message Passing Interface) is a standard message-passing API for parallel and distributed computing, with implementations in many languages.

Many supercomputing applications are built with MPI.

MPI's binary message passing is generally much faster than exchanging JSON-serialized messages.

IPython ipyparallel supports MPI: https://ipyparallel.readthedocs.io/en/latest/

XML-RPC¶

XML-RPC (XML Remote Procedure Call) encodes method names, parameters, and return values as XML, for making remote function calls over HTTP.

Python xmlrpclib (xmlrpc.client in Python 3): https://docs.python.org/2/library/xmlrpclib.html
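In Python 3 the module is xmlrpc.client. This sketch marshals a call to a hypothetical remote method `add` into an XML-RPC request body and parses it back, without a server. Against a live server, `xmlrpc.client.ServerProxy(url).add(2, 3)` would send the same XML over HTTP.

```python
import xmlrpc.client

# Marshal a call to a hypothetical remote method "add" with two parameters
request_xml = xmlrpc.client.dumps((2, 3), methodname="add")

# Unmarshal it again: loads() returns (params_tuple, method_name)
params, method = xmlrpc.client.loads(request_xml)
print(method, params)  # add (2, 3)
```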

See also:

JSON-RPC¶

Avro¶

Apache Avro is a data serialization format and RPC distributed computing protocol with implementations in many languages.

Avro schemas are defined in JSON.

Avro is similar to Protocol Buffers and Thrift, but does not require code generation.

Avro data files store the schema along with the data.

seeAlso:

Protocol Buffers¶

Protocol Buffers (PB) is a standard for structured data interchange.

Protocol Buffers' binary encoding is typically smaller and faster to parse than JSON.

See also:

Thrift¶

Thrift is a standard for structured data interchange in the style of Protocol Buffers.

Thrift's binary and compact protocols are typically smaller and faster to parse than JSON.

See also:

SOA¶

SOA (Service Oriented Architecture) is a collection of Web Standards (e.g. WS-*) and architectural patterns for distributed computing.

WS-*¶

There are many web service specifications; many of their names start with WS-.

https://en.wikipedia.org/wiki/List_of_web_service_specifications

Many/most WS-* standards specify XML.

Some WS-* standards also specify JSON.

WSDL¶

WSDL (Web Services Description Language) is a web standard for describing web services and the schema of their inputs and outputs.

JSON-WSP¶

JSON-WSP (JSON Web-Service Protocol) is a web standard protocol for describing services and request and response objects.

See also: Linked Data Platform (LDP)

ROA¶

REST¶

REST (Representational State Transfer) is a pattern for interacting with web resources using regular HTTP methods like GET, POST, PUT, and DELETE.

A REST API is known as a RESTful API.

A REST implementation maps Create, Read, Update, Delete (CRUD) methods for URI-named collections of resources onto HTTP verbs like GET, POST, and PATCH.

Sometimes, a REST implementation accepts a URL parameter like ?method=PUT, e.g. for JavaScript implementations in browsers which only support GET and POST.

There are many software libraries for implementing REST API Servers:

Java, JS: Restlet:

Wikipedia: https://en.wikipedia.org/wiki/Restlet

Ruby: Grape:

Python: Django REST Framework:

There are many software libraries for implementing REST API Clients:

Python REST API client libraries:

requests:

httpie is a CLI utility written on top of requests:

WebTest:
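The CRUD-to-HTTP-verb mapping and the ?method= override described above can be sketched with the standard library alone. The CRUD_TO_HTTP table and the effective_method() helper are hypothetical illustrations, not any framework's API. Real frameworks differ in the parameter name, and some use a header such as X-HTTP-Method-Override instead:

```python
from urllib.parse import parse_qs, urlsplit

# One common mapping of CRUD operations onto HTTP verbs (conventions vary).
CRUD_TO_HTTP = {
    "create": "POST",
    "read": "GET",
    "update": "PUT",  # full replacement; PATCH is often used for partial updates
    "delete": "DELETE",
}


def effective_method(http_method: str, url: str) -> str:
    """Return the method a server should dispatch on (hypothetical helper).

    If a POST carries a ?method=... query parameter (for clients that can
    only send GET and POST), honor that override for PUT, PATCH, or DELETE.
    """
    http_method = http_method.upper()
    if http_method != "POST":
        return http_method
    query = parse_qs(urlsplit(url).query)
    override = query.get("method", [None])[0]
    if override and override.upper() in {"PUT", "PATCH", "DELETE"}:
        return override.upper()
    return http_method


print(effective_method("POST", "/items/42?method=PUT"))    # PUT
print(effective_method("GET", "/items/42?method=DELETE"))  # GET
```

Restricting overrides to POST keeps a crafted GET link (e.g. an image URL) from triggering a DELETE.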

WAMP¶

WAMP (Web Application Messaging Protocol) defines Publish/Subscribe (PubSub) and Remote Procedure Call (RPC) over WebSocket, JSON, and URIs

Using WAMP, you can have a browser-based UI, the embedded device and your backend talk to each other in real-time:

WAMP Router = Broker (PubSub topic broker) + Dealer (RPC)

WAMP can also run with other serializers (e.g. MessagePack) and other transports than the preferred WebSocket with JSON.

Implementations:

https://tools.ietf.org/html/draft-oberstet-hybi-tavendo-wamp#section-6.5

WAMP Message Codes and Direction
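With the JSON serializer, each WAMP message is a JSON array whose first element is the message type code. Below is a minimal sketch that builds a PUBLISH message, [PUBLISH, Request|id, Options|dict, Topic|uri, Arguments|list]. The codes below (PUBLISH = 16, SUBSCRIBE = 32, EVENT = 36, CALL = 48, RESULT = 50) follow the WAMP specification's message code table linked above and should be checked against it. The topic URI is hypothetical:

```python
import json
import secrets

# WAMP message type codes (verify against the spec's "Message Codes and Direction" table).
PUBLISH = 16
SUBSCRIBE = 32
EVENT = 36
CALL = 48
RESULT = 50


def new_request_id() -> int:
    # IDs are drawn from [1, 2**53] so they fit exactly in a JSON/JavaScript number.
    return secrets.randbelow(2**53) + 1


def publish_message(topic: str, *args) -> str:
    """Serialize [PUBLISH, Request|id, Options|dict, Topic|uri, Arguments|list] as JSON."""
    return json.dumps([PUBLISH, new_request_id(), {}, topic, list(args)])


msg = publish_message("com.example.mytopic", "Hello, world!")  # hypothetical topic URI
decoded = json.loads(msg)
print(decoded[0], decoded[3], decoded[4])  # 16 com.example.mytopic ['Hello, world!']
```

A WAMP router acting as Broker would forward this as an EVENT message to every session subscribed to the topic.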

Data Grid¶

Search Engine Indexing¶

Semantic Web graph of Linked Data: RDFa, JSON-LD, Schema.org.

ElasticSearch¶

ElasticSearch is an Open Source realtime search server written in Java built on Apache Lucene with a RESTful API for indexing JSON documents.

ElasticSearch supports geographical (bounded) queries.

ElasticSearch can build better indexes for faster search response times when ElasticSearch Mappings are specified.

ElasticSearch mappings can be (manually) transformed to JSON-LD @context mappings: https://github.com/westurner/elasticsearchjsonld

Haystack¶

Haystack is an Open Source Python Django API for a number of search services (e.g. Solr, ElasticSearch, Whoosh, Xapian).

Lucene¶

Apache Lucene is an Open Source search indexing library written in Java.

ElasticSearch, Nutch, and Solr are implemented on top of Lucene.
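Lucene, and the services built on it, are organized around an inverted index: a mapping from each term to the documents that contain it. Below is a toy Python sketch of that idea, with naive lowercase tokenization and boolean AND queries only. It does not reflect how Lucene stores, analyzes, or scores its indexes:

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list:
    # Naive analyzer: lowercase and split on runs of non-word characters.
    return [t for t in re.split(r"\W+", text.lower()) if t]


def build_index(docs: dict) -> dict:
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index: dict, query: str) -> set:
    """Return ids of documents containing every query term (boolean AND)."""
    postings = [index.get(term, set()) for term in tokenize(query)]
    return set.intersection(*postings) if postings else set()


docs = {
    1: "ElasticSearch is built on Apache Lucene",
    2: "Solr is implemented on top of Lucene",
    3: "Whoosh is written in Python",
}
index = build_index(docs)
print(sorted(search(index, "lucene")))       # [1, 2]
print(sorted(search(index, "Lucene solr")))  # [2]
```

Looking up a term is a dictionary access rather than a scan of every document, which is what makes search over large collections fast.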

Nutch¶

Apache Nutch is an Open Source distributed web crawler and search engine written in Java and implemented on top of Lucene.

Nutch has a pluggable storage and indexing API with support for e.g. Solr, ElasticSearch.

Solr¶

Apache Solr is an Open Source web search platform written in Java and implemented on top of Lucene.

Whoosh¶

Whoosh is an Open Source search indexing library written in Python.

Xapian¶

Xapian is an Open Source search library written in C++ with bindings for many languages.

Information Retrieval¶

Christopher D. Manning, Prabhakar Raghavan and Hinrich Schütze, Introduction to Information Retrieval, Cambridge University Press. 2008.

Time Standards¶

International Atomic Time (IAT)¶

International Atomic Time (IAT; officially abbreviated TAI, from the French Temps Atomique International) is an international standard for extremely precise timekeeping, which is the basis for UTC (Earth civil time) and for Terrestrial Time (TT, used in astronomy).

Long Now Dates¶

2015 # ISO8601 date

02015 # 5-digit Y10K date

Decimal Time¶

https://en.wikipedia.org/wiki/Leap_year (~365.25 days/yr)

https://en.wikipedia.org/wiki/Leap_second (rotation time ~= atomic time)

Unix Time¶

Defined as the number of seconds that have elapsed since 00:00:00 Coordinated Universal Time (UTC), Thursday, 1 January 1970, not counting leap seconds.

Unix time is the delta in seconds since 1970-01-01T00:00:00Z, not counting leap seconds:

0 # Unix time

1970-01-01T00:00:00Z # ISO8601 timestamp

1435255816 # Unix time

2015-06-25T18:10:16Z # ISO8601 timestamp
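The Unix time examples above can be checked with Python's standard library. calendar.timegm() treats a UTC time tuple as seconds since the epoch. datetime.fromtimestamp() with an explicit UTC timezone converts back. Neither counts leap seconds, matching Unix time itself:

```python
import calendar
from datetime import datetime, timezone

# Unix time -> ISO8601 (UTC); passing tz avoids interpreting the timestamp in local time.
dt = datetime.fromtimestamp(1435255816, tz=timezone.utc)
print(dt.isoformat())  # 2015-06-25T18:10:16+00:00

# ISO8601 (UTC) -> Unix time; timegm() interprets the struct_time as UTC.
ts = calendar.timegm(datetime(2015, 6, 25, 18, 10, 16).timetuple())
print(ts)  # 1435255816

print(datetime.fromtimestamp(0, tz=timezone.utc).isoformat())  # 1970-01-01T00:00:00+00:00
```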

See also: Swatch Internet Time (Beat Time)

Year Zero¶

The Gregorian and Julian calendars (with Common Era / Anno Domini year numbering) do not include a year zero: 1 BCE is followed by 1 CE.

Astronomical year numbering includes a year zero.

Before Present dates do not specify a year zero (because they are relative to the current (or published) date).

Astronomical year numbering¶

Astronomical year numbering includes a year zero:

Tools with support for Astronomical year numbering:

AstroPy is a Python library that supports astronomical year numbering:

Before Present (BP)¶

Before Present (BP) dates are relative to the current date (or date of publication); e.g. “2.6 million years ago”. In radiocarbon dating, “present” is conventionally fixed at 1950 CE.

Common Era (CE)¶

BCE (Before Common Era) == BC

CE (Common Era) == AD (Anno Domini)

Common Era and Year Zero:

5000 BCE == -5000 CE

1 BCE == -1 CE

0 BCE == 0 CE

0 CE == 0 BCE

1 CE == 1 CE

2015 CE == 2015 CE

Note

Are these off by one?

Astronomical year numbering: yes. Converting a Julian/Gregorian BCE year gives astronomical year = 1 - (BCE year), so 1 BCE == 0 and 5000 BCE == -4999.

Year Zero: the Julian and Gregorian calendars have no year zero, so “0 BCE” and “0 CE” do not exist.
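The off-by-one conversion between BCE/CE years and astronomical year numbering fits in a pair of small functions. This is a minimal sketch, and the function names are hypothetical:

```python
def to_astronomical(year: int, era: str) -> int:
    """Convert a Julian/Gregorian year with era 'BCE' or 'CE' to astronomical numbering.

    There is no year 0 in BCE/CE numbering: 1 BCE -> 0, 2 BCE -> -1, 1 CE -> 1.
    """
    if year < 1:
        raise ValueError("BCE/CE years start at 1 (there is no year zero)")
    if era == "CE":
        return year
    if era == "BCE":
        return 1 - year
    raise ValueError("era must be 'BCE' or 'CE'")


def from_astronomical(astro_year: int) -> tuple:
    """Convert an astronomical year back to (year, era)."""
    if astro_year >= 1:
        return astro_year, "CE"
    return 1 - astro_year, "BCE"


print(to_astronomical(1, "BCE"))     # 0
print(to_astronomical(5000, "BCE"))  # -4999
print(from_astronomical(0))          # (1, 'BCE')
```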

Common Era and Python datetime calculations:

# Paleolithic Era (~2.6m years ago -> ~12000 years ago)

# "2.6m years ago" = (2.6m - (2015)) BCE = 2597985 BCE = -2597985 CE

2597985 BCE == -2597985 CE

### Python datetime w/ scientific notation string formatter

>>> import datetime

>>> year = 2015  # or datetime.datetime.now().year (which changes the output below)

>>> '{:.6e}'.format(2.6e6 - year)

'2.597985e+06'

### Python datetime supports dates >= 1 CE (datetime.MINYEAR == 1).

>>> datetime.date(1, 1, 1)

datetime.date(1, 1, 1)

>>> datetime.datetime(1, 1, 1)

datetime.datetime(1, 1, 1, 0, 0)

### Python pypi:arrow supports dates >= 1 CE.

>>> !pip install arrow

>>> import arrow

>>> arrow.get(1, 1, 1)

<Arrow [0001-01-01T00:00:00+00:00]>

### astropy.time.Time supports dates before 1 CE (and Year Zero)

### https://astropy.readthedocs.io/en/latest/time/

>>> !conda install astropy

>>> import astropy.time

>>> # format='jd' is a Julian Date: days (not years) since -4712-01-01T12:00 (4713 BCE),

>>> # so JD -2.6e6 is roughly 11,800 BCE, not 2.6m years ago (TimeJulianEpoch is format='jyear')

>>> astropy.time.Time(-2.6e6, format='jd', scale='utc')

<Time object: scale='utc' format='jd' value=-2600000.0>

Time Zones¶

https://en.wikipedia.org/wiki/Daylight_saving_time

https://en.wikipedia.org/wiki/List_of_UTC_time_offsets

https://en.wikipedia.org/wiki/List_of_tz_database_time_zones

UTC¶

UTC (Coordinated Universal Time) is the primary time standard for civil (clock) time on Earth.

Earth Time Zones are specified as offsets from UTC.

UTC is derived from International Atomic Time (IAT), with occasional leap seconds inserted to keep UTC within 0.9 seconds of Earth’s rotational time (UT1). Atomic time counts SI seconds, which are defined by the hyperfine transition frequency of caesium-133 atoms as measured by atomic clocks (see QUDT).

Many/most computer systems work with UTC, but are not exactly synchronized with International Atomic Time (IAT) (see also: RTC, NTP and time drift).

US Time Zones¶

https://en.wikipedia.org/wiki/Time_in_the_United_States#Standard_time_and_daylight_saving_time

https://en.wikipedia.org/wiki/History_of_time_in_the_United_States

Time Zone names, URIs, and ISO8601 UTC offsets:

Time Zone names, URNs, URIs | UTC Offset | UTC DST Offset
https://en.wikipedia.org/wiki/Coordinated_Universal_Time #tz: Coordinated Universal Time, UTC, Zulu | -0000 Z | +0000 Z
https://en.wikipedia.org/wiki/Atlantic_Time_Zone https://en.wikipedia.org/wiki/America/Halifax #tz: Atlantic, Antarctica (Palmer), AST, ADT America/Halifax | -0400 AST | -0300 ADT
https://en.wikipedia.org/wiki/America/St_Thomas #tz: America/St_Thomas, America/Virgin | -0400 | -0400
https://en.wikipedia.org/wiki/Eastern_Time_Zone https://en.wikipedia.org/wiki/EST5EDT #tz: Eastern, EST, EDT America/New_York | -0500 EST | -0400 EDT
https://en.wikipedia.org/wiki/Central_Time_Zone https://en.wikipedia.org/wiki/CST6CDT #tz: Central, CST, CDT America/Chicago | -0600 CST | -0500 CDT
https://en.wikipedia.org/wiki/Mountain_Time_Zone https://en.wikipedia.org/wiki/MST7MDT #tz: Mountain, MST, MDT America/Denver | -0700 MST | -0600 MDT
https://en.wikipedia.org/wiki/Pacific_Time_Zone https://en.wikipedia.org/wiki/PST8PDT #tz: Pacific, PST, PDT America/Los_Angeles | -0800 PST | -0700 PDT
https://en.wikipedia.org/wiki/Alaska_Time_Zone AKST9AKDT #tz: Alaska, AKST, AKDT America/Juneau | -0900 AKST | -0800 AKDT
https://en.wikipedia.org/wiki/Hawaii-Aleutian_Time_Zone HAST10HADT #tz: Hawaii Aleutian, HAST, HADT Pacific/Honolulu | -1000 HAST | -0900 HADT
https://en.wikipedia.org/wiki/Samoa_Time_Zone #tz: Samoa Time Zone, SST Pacific/Samoa | -1100 SST | -1100 SST
https://en.wikipedia.org/wiki/Chamorro_Time_Zone #tz: Chamorro, Guam Pacific/Guam | +1000 | +1000
https://en.wikipedia.org/wiki/Time_in_Antarctica Antarctica (Amundsen, McMurdo), South Pole Antarctica/South_Pole | +1200 | +1300

US Daylight Saving Time¶

Currently, daylight saving time starts on the second Sunday in March and ends on the first Sunday in November, with the time changes taking place at 2:00 a.m. local time.

With a mnemonic word play referring to seasons, clocks “spring forward and fall back” — that is, in spring (technically late winter) the clocks are moved forward from 2:00 a.m. to 3:00 a.m., and in fall they are moved back from 2:00 am to 1:00 am.

Daylight saving time starts and ends on the following dates (from https://en.wikipedia.org/wiki/Time_in_the_United_States#Daylight_saving_time_(DST)):

Year | DST start date | DST end date
2015 | 2015-03-08 02:00 | 2015-11-01 02:00
2016 | 2016-03-13 02:00 | 2016-11-06 02:00
2017 | 2017-03-12 02:00 | 2017-11-05 02:00
2018 | 2018-03-11 02:00 | 2018-11-04 02:00
2019 | 2019-03-10 02:00 | 2019-11-03 02:00
2020 | 2020-03-08 02:00 | 2020-11-01 02:00
2021 | 2021-03-14 02:00 | 2021-11-07 02:00
2022 | 2022-03-13 02:00 | 2022-11-06 02:00
2023 | 2023-03-12 02:00 | 2023-11-05 02:00
2024 | 2024-03-10 02:00 | 2024-11-03 02:00
2025 | 2025-03-09 02:00 | 2025-11-02 02:00
2026 | 2026-03-08 02:00 | 2026-11-01 02:00
2027 | 2027-03-14 02:00 | 2027-11-07 02:00
2028 | 2028-03-12 02:00 | 2028-11-05 02:00
2029 | 2029-03-11 02:00 | 2029-11-04 02:00
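The DST dates follow from the rule quoted earlier: the second Sunday in March and the first Sunday in November, at 02:00 local time, in effect since 2007. Below is a minimal sketch that computes these dates with the standard library. It encodes only that current US rule, not historical rules or future legislative changes:

```python
from datetime import date, timedelta

SUNDAY = 6  # date.weekday(): Monday == 0 ... Sunday == 6


def nth_sunday(year: int, month: int, n: int) -> date:
    """Return the n-th Sunday (1-based) of the given month."""
    first = date(year, month, 1)
    days_until_sunday = (SUNDAY - first.weekday()) % 7
    return first + timedelta(days=days_until_sunday + 7 * (n - 1))


def us_dst_dates(year: int) -> tuple:
    """(start, end) dates of US daylight saving time under the rule in effect since 2007."""
    return nth_sunday(year, 3, 2), nth_sunday(year, 11, 1)


for year in (2015, 2021, 2029):
    start, end = us_dst_dates(year)
    print(year, f"{start} 02:00", f"{end} 02:00")
# 2015 2015-03-08 02:00 2015-11-01 02:00
# 2021 2021-03-14 02:00 2021-11-07 02:00
# 2029 2029-03-11 02:00 2029-11-04 02:00
```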

ISO8601¶

ISO8601 is an ISO standard for specifying Gregorian dates, times, datetime intervals, durations, and recurring datetimes.

The date command can print ISO8601-compatible datestrings:

$ date +'%FT%T%z'
2016-01-01T22:11:59-0600
$ date +'%F %T%z'
2016-01-01 22:11:59-0600
$ date -Iseconds --utc  # GNU date
2020-03-26T03:35:24+00:00
$ date -Is -u  # GNU date
2020-03-26T03:35:24+00:00

Roughly, an ISO8601 datetime is specified as: year, dash, month, dash, day, (T or a space character), hour, colon, minute, colon, second, (Z for UTC, or a time zone offset (e.g. -06:00, +0000)); where the dashes and colons are optional.

ISO8601 specifies a standard for time intervals: start datetime, forward slash, end datetime.

ISO8601 specifies a standard for relative time durations: P, then a number of years Y, months M, weeks W, and days D, then T, then hours H, minutes M, and seconds S (e.g. PT1M is one minute, P1M is one month).

A Z time zone designator specifies UTC (Coordinated Universal Time) (or “Zulu”) time.

Many/most W3C standards (such as XSD) specify ISO8601 time formats: https://www.w3.org/TR/NOTE-datetime

A few examples of ISO8601:

2014

2014-10

2014-10-23

20141023

2014-10-23T20:59:30+00:00 # UTC

2014-10-23T20:59:30Z # UTC / Zulu

2014-10-23T20:59:30-06:00 # CST

2014-10-23T20:59:30-06 # CST

2014-10-23T20:59:30-05:00 # CDT

2014-10-23T20:59:30-05 # CDT

20

20:59

2059

20:59:30

205930

2014-10-23T20:59:30Z/2014-10-23T21:00:00Z

2014-10-23T20:59:30-05:00/2014-10-23T21:00:00-06

PT1H

PT1M

P1M

P1Y1M1W1DT1H1M1S
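Python's standard library can parse several of the timestamp forms above. datetime.strptime() with %z accepts Z, ±HHMM, and ±HH:MM offsets (Python 3.7+). The standard library has no ISO8601 duration parser, so parse_duration() below is a hypothetical minimal sketch. It handles only whole-number PnYnMnWnDTnHnMnS fields, with no fractions and no normalization of months to days:

```python
import re
from datetime import datetime


def parse_timestamp(s: str) -> datetime:
    """Parse an extended-format ISO8601 datetime with a Z or numeric UTC offset."""
    return datetime.strptime(s, "%Y-%m-%dT%H:%M:%S%z")


# P, then optional Y/M/W/D fields; an optional T section with H/M/S fields.
_DURATION = re.compile(
    r"^P(?:(?P<years>\d+)Y)?(?:(?P<months>\d+)M)?(?:(?P<weeks>\d+)W)?(?:(?P<days>\d+)D)?"
    r"(?:T(?:(?P<hours>\d+)H)?(?:(?P<minutes>\d+)M)?(?:(?P<seconds>\d+)S)?)?$"
)


def parse_duration(s: str) -> dict:
    """Split an ISO8601 duration into fields; 'M' means months before T, minutes after."""
    m = _DURATION.match(s)
    if not m or s in ("P", "PT") or s.endswith("T"):
        raise ValueError(f"not a supported ISO8601 duration: {s!r}")
    return {k: int(v) for k, v in m.groupdict().items() if v is not None}


utc = parse_timestamp("2014-10-23T20:59:30Z")
cdt = parse_timestamp("2014-10-23T20:59:30-05:00")
print(cdt - utc)  # 5:00:00 (same wall-clock time, 5 hours later in absolute time)
print(parse_duration("PT1M"))  # {'minutes': 1}
print(parse_duration("P1M"))   # {'months': 1}
```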

Note

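ISO8601 does not define separate fields for milliseconds, microseconds, nanoseconds, picoseconds, femtoseconds, or attoseconds; instead, it allows a decimal fraction on the smallest time element (e.g. 2014-10-23T20:59:30.123456Z), with the number of digits agreed upon by the parties exchanging the data.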

NTP¶

NTP (Network Time Protocol) is a standard for synchronizing clock times.

Most Operating Systems and mobile devices support NTP.

NTP clients calculate time drift (or time skew) and network latency and then gradually adjust the local system time to the most recently retrieved server time.
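NTP estimates clock offset (drift) and network latency from four timestamps per exchange: t0 client send, t1 server receive, t2 server send, and t3 client receive. Below is a minimal sketch of the standard NTP offset and round-trip delay formulas. The timestamp values are made up for illustration:

```python
def ntp_offset_and_delay(t0: float, t1: float, t2: float, t3: float) -> tuple:
    """Return (clock_offset, round_trip_delay) in seconds.

    t0: client transmit time (client clock)
    t1: server receive time  (server clock)
    t2: server transmit time (server clock)
    t3: client receive time  (client clock)
    The offset estimate assumes the network delay is the same in both directions.
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay


# Illustrative values: the client clock is 0.5 s behind the server,
# with 0.05 s of network delay each way and 0.01 s of server processing.
offset, delay = ntp_offset_and_delay(t0=100.0, t1=100.55, t2=100.56, t3=100.11)
print(round(offset, 3), round(delay, 3))  # 0.5 0.1
```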

Many OS distributions run their own NTP servers (in order to reduce load on the core NTP pool servers).

Linked Data¶

Linked Data Standards:

5 ★ Linked Data¶

https://www.w3.org/TR/ld-glossary/#x5-star-linked-open-data

☆

Publish data on the Web in any format (e.g., PDF, JPEG) accompanied by an explicit Open License (expression of rights).

☆☆

Publish structured data on the Web in a machine-readable format (e.g. XML).

☆☆☆

Publish structured data on the Web in a documented, non-proprietary data format (e.g. CSV, KML).

☆☆☆☆

Publish structured data on the Web as RDF (e.g. Turtle, RDFa, JSON-LD, SPARQL).

☆☆☆☆☆

In your RDF, have the identifiers be links (URLs) to useful data sources.

See: Semantic Web

Semantic Web¶

https://en.wikipedia.org/wiki/Template:Semantic_Web

https://en.wikipedia.org/wiki/Semantics_(computer_science)

W3C Semantic Web Wiki:

Semantic Web Standards¶

https://en.wikipedia.org/wiki/Statement_(computer_science)

https://en.wikipedia.org/wiki/Resource_(computing)

https://en.wikipedia.org/wiki/Entity-attribute-value_model

https://en.wikipedia.org/wiki/Tuple

https://en.wikipedia.org/wiki/Reification_(computer_science)#Reification_on_Semantic_Web

https://en.wikipedia.org/w/index.php?title=Eigenclass_model&oldid=592778140#In_RDF_Schema

Representations / Serializations

Vocabularies

RDFS: DCMI, SKOS, Schema.org

Query APIs

Ontologies

Reasoners

See:

OWL 2 Profiles

Web Standards¶

Web Names¶

URL¶

URI¶

URN¶

IEC¶

IEC (International Electrotechnical Commission) is a standards body.

List of IEC standards: https://en.wikipedia.org/wiki/List_of_IEC_standards

IETF¶

IETF (Internet Engineering Task Force) is a standards body.

List of IETF standards: https://tools.ietf.org/html/

ISO¶

ISO (International Organization for Standardization) is a standards body.

List of ISO standards: https://www.iso.org/iso/home/standards.htm

OMG¶

OMG (Object Management Group) is a standards body.

UML is an OMG standard.

CORBA is an OMG standard.

List of OMG standards: https://www.omg.org/spec/

https://en.wikipedia.org/wiki/Object_Management_Group#OMG_Standards

W3C¶

W3C (World Wide Web Consortium) is a standards body.

List of W3C standards / “Technical Recommendations”: https://www.w3.org/TR/

HTTP¶

HTTP (HyperText Transfer Protocol) is an open standard, text-based (in HTTP/1.x) request-response protocol over TCP/IP for text and binary data interchange.

HTTPS (Secure HTTP) wraps HTTP in SSL/TLS to secure HTTP.

HTTP in RDF¶

@prefix http: <http://www.w3.org/2011/http#> .
@prefix http-headers: <http://www.w3.org/2011/http-headers#> .
@prefix http-methods: <http://www.w3.org/2011/http-methods#> .
@prefix http-statusCodes: <http://www.w3.org/2011/http-statusCodes#> .

HTTPS¶

HTTPS (HTTP over SSL) is HTTP wrapped in TLS/SSL.

TLS (Transport Layer Security)

SSL (Secure Sockets Layer)

HTTP STS¶

HTTP STS (HTTP Strict Transport Security) is a standardized extension for notifying browsers that all requests should be made over HTTPS indefinitely or for a specified time period.

See also: HTTPS Everywhere

CSS¶

CSS (Cascading Style Sheets) define the presentational aspects of HTML and a number of mobile and desktop web frameworks.

CSS is designed to ensure separation of data and presentation. With JavaScript, the separation is then data, code, and presentation.

RTMP¶

RTMP (Real-Time Messaging Protocol) is a TCP/IP protocol for streaming audio, video, and data, originally developed for Flash; its specification has since been published openly.

https://en.wikipedia.org/wiki/Real_Time_Messaging_Protocol#Client_software

Adobe Flash Player

https://en.wikipedia.org/wiki/Real_Time_Messaging_Protocol#Server_software

Adobe Flash Live Media Server

Amazon AWS S3 HTTP Object Storage, CloudFront CDN

Helix Universal Media Server

Red5 (Open Source)

FreeSwitch (Open Source, VoIP, SIP, Video Chat)

WebRTC covers most RTMP use cases in the browser, and is now far more widely deployed than Flash Player (especially on mobile devices); Adobe ended support for Flash Player at the end of 2020.

WebSocket¶

ws://

WebSocket is a full-duplex (two-way) TCP/IP protocol for audio, video, and data which can interoperate with HTTP Web Servers.

WebSockets are often more efficient than other methods for realtime HTTP like HTTP Streaming and long polling.

WebSockets work with many/most HTTP proxies.

https://en.wikipedia.org/wiki/Comparison_of_WebSocket_implementations

Python: pypi:gevent-websocket, pypi:websockets (asyncio), pypi:autobahn (pypi:twisted, asyncio)

See also: https://en.wikipedia.org/wiki/Push_technology, WebRTC
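WebSocket interoperates with HTTP servers because each connection starts as an HTTP/1.1 Upgrade request. The server confirms the handshake by returning Sec-WebSocket-Accept, which is the base64-encoded SHA-1 of the client's Sec-WebSocket-Key concatenated with a fixed GUID (RFC 6455). Below is a minimal sketch of that one computation, using the sample key from RFC 6455:

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def websocket_accept(sec_websocket_key: str) -> str:
    """Compute the Sec-WebSocket-Accept response header value for a client key."""
    digest = hashlib.sha1((sec_websocket_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


print(websocket_accept("dGhlIHNhbXBsZSBub25jZQ=="))  # s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```

After the server replies "101 Switching Protocols" with this header, the same TCP connection carries WebSocket frames instead of HTTP messages.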

WebRTC¶

WebRTC is a web standard for decentralized or centralized streaming of audio, video, and data in browser, without having to download any plugins.

Note

WebRTC is supported by most browsers: http://iswebrtcreadyyet.com/

HTTP/2¶

HTTP/2 (HTTP2) is the second major version of HTTP (RFC 7540, since obsoleted by RFC 9113); HTTP/3 (RFC 9114) is newer.

HTTP/2 is largely derived from the SPDY protocol.

HTML¶

HTML (HyperText Markup Language) is an open standard for representing documents with tags, attributes, and hyperlinks.

Recent HTML standards include HTML4, XHTML, and HTML5.

HTML4¶

HTML4 is the fourth generation HTML standard.

XHTML¶

XHTML is an XML-conforming HTML standard which is being superseded by HTML5.

Compared to HTML4, XHTML requires closing tags, supports additional namespace declarations, and expects script and style content to be wrapped in CDATA blocks, among a few other notable differences.

XHTML has not gained the widespread adoption of HTML4, and is being largely superseded by HTML5.

HTML5¶

HTML5 is the fifth generation HTML standard with many new (and removed) features.

Like its predecessors, HTML5 is not case sensitive, but it is recommended to use lowercased tags and attributes.

Differences Between HTML4 and HTML5

https://html-differences.whatwg.org/

HTML5 standardizes how parsers handle missing closing tags (many browsers had already implemented routines for auto-closing broken markup).

Frames have been removed

Presentational attributes have been removed (in favor of CSS)

HTML 5.1

HTML 5.1 is in the works:

XML¶

XML (Extensible Markup Language) is a standard for representing data with tags and attributes.

Like HTML, XML is derived from SGML.

XSD¶

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

XSD (XML Schema Datatypes) are standard datatypes for things like strings, integers, floats, and dates for XML and also RDF.

JSON¶

JSON (JavaScript Object Notation) is a standard for representing data in a JavaScript compatible way; with a restricted set of data types.

Conforming JSON does not contain JavaScript code, only data.

It is not safe to eval JSON, because it could contain code.

There are many parsers for JSON.
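In Python, the standard-library json module parses JSON strictly as data. A payload containing code is rejected rather than executed, unlike with eval(). This is a minimal sketch, and the malicious string is a made-up example:

```python
import json

good = '{"name": "example", "tags": ["a", "b"], "count": 3, "ok": true, "missing": null}'
data = json.loads(good)  # only produces dict, list, str, int/float, bool, None
print(data["tags"], data["ok"], data["missing"])  # ['a', 'b'] True None

# Not JSON: eval() would execute this expression; json.loads() refuses it.
untrusted = '__import__("os").getcwd()'
rejected = False
try:
    json.loads(untrusted)
except json.JSONDecodeError as err:
    rejected = True
    print("rejected:", err.msg)  # rejected: Expecting value
```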

CSV¶

CSV (Comma Separated Values) is a flat file format for tabular data with rows and columns.

Most spreadsheet tools can export (raw and computed) data from a sheet into a CSV file, for use with many other tools.
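Splitting CSV text by hand on commas and line breaks miscounts fields and records when a quoted field contains the delimiter, a quote, or a line break. Python's standard-library csv module handles that quoting. This is a minimal sketch with made-up rows:

```python
import csv
import io

rows = [
    ["id", "name", "note"],
    [1, "Smith, Jane", 'said "hi"'],
    [2, "Doe", "line one\nline two"],
]

# Write: fields containing commas, quotes, or newlines are quoted (QUOTE_MINIMAL default).
buf = io.StringIO()
csv.writer(buf).writerows(rows)
text = buf.getvalue()
print(text)

# Naive line splitting miscounts records because of the embedded newline.
print(len(text.splitlines()))  # 4 physical lines, but only 3 records

# Read: csv.reader reassembles quoted fields (all values come back as strings).
parsed = list(csv.reader(io.StringIO(text, newline="")))
print(parsed[1])    # ['1', 'Smith, Jane', 'said "hi"']
print(len(parsed))  # 3
```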

CSVW¶

@prefix csvw: <http://www.w3.org/ns/csvw#> .

CSVW (CSV on the Web) is a set of relatively new standards for representing CSV rows and columns as RDF (and JSON / JSON-LD) along with metadata.

RDF¶

@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .

RDF (Resource Description Framework) is a standard data model for representing data as triples.

Primer

Concepts

Useful Resources

“Linked Data Patterns: A pattern catalogue for modelling, publishing, and consuming Linked Data” https://patterns.dataincubator.org/book/

See also: RDF Triplestores

RDF Interfaces¶

RDF Interfaces is an open standard for RDF APIs (e.g. as implemented by RDF libraries and RDF Triplestores).

createBlankNode -> BlankNode

createNamedNode -> NamedNode

createLiteral -> Literal

createTriple -> Triple(RDFNode s, RDFNode p, RDFNode o)

createGraph -> [] Triple

createAction -> TripleAction(TripleFilter, TripleCallback)

createProfile -> Profile

createTermMap -> TermMap

createPrefixMap -> PrefixMap

Implementations of RDF Interfaces:

Javascript and/or Node.js implementations of RDF Interfaces:

https://www.w3.org/community/rdfjs/wiki/Comparison_of_RDFJS_libraries

RDFLib (Python) mappings to RDF Interfaces:

BlankNode -> rdflib.term.BNode

NamedNode -> rdflib.term.URIRef, rdflib.term.Variable? TODO

Literal -> rdflib.term.Literal

Triple -> tuple()

Graph -> rdflib.graph.Graph, rdflib.graph.ConjunctiveGraph, rdflib.graph.QuotedGraph, list()

Action -> _____ TODO

TripleFilter / TripleCallback -> rdflib.store.TripleAddedEvent, rdflib.store.TripleRemovedEvent

https://rdflib.readthedocs.io/en/latest/apidocs/rdflib.html#rdflib.term.Node

Profile -> ______ TODO

TermMap -> ____ TODO

PrefixMap -> rdflib.namespace.NamespaceManager

https://rdflib.readthedocs.io/en/latest/apidocs/rdflib.html#rdflib.namespace.NamespaceManager

Note

rdflib is not order-preserving at this time, because internally Graphs are represented as dict and not yet collections.OrderedDict (for which there is now a C implementation in the Python 3.5 standard library); so output may not be in the same sequence as input (or an rdflib.store.Store, even) even when there are no changes made to the graph.

It would be preferable to maintain the input source order (though, especially for large distributed queries which merge triples into one context, sorted / source order is not a good assumption to make).

rdf:List collections are ordered.

rdf:List with Turtle / N3: :examplePredicate ( "uno"@es "one"@en ) ;

rdf:first, rdf:rest, rdf:nil: “RDFS does not require that there be only one first element of a list-like structure, or even that a list-like structure have a first element.”

rdf:List with JSON-LD @context: {"@context": {"attr": {"@container": "@list"}}}, or an explicit @list object: {"attr": {"@list": ["one", "uno"]}}
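An rdf:List (a Turtle ( ... ) collection) is encoded as a chain of nodes linked by rdf:first (the item) and rdf:rest (the next node), ending in rdf:nil. Below is a minimal sketch that expands a Python list into those triples. It assumes, for illustration, that terms are plain strings in N-Triples syntax and that blank nodes get generated labels (_:b0, _:b1, ...):

```python
RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"


def list_to_triples(items, start=0):
    """Return (head_node, triples) encoding items as an rdf:List.

    Terms are plain strings in N-Triples syntax; blank nodes are labeled _:b{n}.
    An empty list is just rdf:nil.
    """
    nil = f"<{RDF}nil>"
    if not items:
        return nil, []
    nodes = [f"_:b{start + i}" for i in range(len(items))]
    triples = []
    for i, (node, item) in enumerate(zip(nodes, items)):
        rest = nodes[i + 1] if i + 1 < len(nodes) else nil
        triples.append((node, f"<{RDF}first>", item))
        triples.append((node, f"<{RDF}rest>", rest))
    return nodes[0], triples


head, triples = list_to_triples(['"uno"@es', '"one"@en'])
for s, p, o in triples:
    print(s, p, o, ".")
```

The subject using the list (e.g. the :examplePredicate triple) points at the head node, _:b0 here.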

N-Triples¶

.nt, application/n-triples

N-Triples is a standard for serializing RDF triples to text.
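Each N-Triples line is one triple. The subject, predicate, and object terms are separated by spaces and the line ends with " .". IRIs go in angle brackets and literals in double quotes, optionally followed by @lang or ^^<datatype>. Below is a minimal serializer sketch. The term helpers and the example.org IRIs are hypothetical, and only a few string escapes are handled:

```python
def iri(value: str) -> str:
    return f"<{value}>"


def literal(value: str, lang: str = None, datatype: str = None) -> str:
    # Escape backslash first, then quotes and line breaks.
    escaped = (value.replace("\\", "\\\\").replace('"', '\\"')
               .replace("\n", "\\n").replace("\r", "\\r"))
    if lang:
        return f'"{escaped}"@{lang}'
    if datatype:
        return f'"{escaped}"^^<{datatype}>'
    return f'"{escaped}"'


def to_ntriples(triples) -> str:
    """Serialize (subject, predicate, object) term strings, one triple per line."""
    return "".join(f"{s} {p} {o} .\n" for s, p, o in triples)


EX = "http://example.org/"  # hypothetical namespace
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"
XSD_INTEGER = "http://www.w3.org/2001/XMLSchema#integer"

doc = to_ntriples([
    (iri(EX + "alice"), iri(RDFS_LABEL), literal("Alice", lang="en")),
    (iri(EX + "alice"), iri(EX + "age"), literal("42", datatype=XSD_INTEGER)),
    (iri(EX + "alice"), iri(EX + "quote"), literal('says "hi"')),
])
print(doc, end="")
```

Because each line stands alone, N-Triples files can be concatenated, split, or streamed line by line.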

RDF/XML¶

TriX¶

TriX (Triples in XML) is an XML serialization for RDF which, unlike RDF/XML, supports named graphs.

N3¶

.n3, text/n3

N3 (Notation3) is a standard which extends the Turtle RDF serialization standard with a few extra features.

=> implies (useful for specifying production rules)

Turtle¶

Turtle is a standard for serializing RDF triples into human-readable text.

TriG¶

.trig, application/trig

TriG (…) extends the Turtle RDF standard to allow multiple named graphs to be expressed in one file (as triples with a named graph IRI (“quads”)).

Triples without a specified named graph are, by default, part of the “Default Graph”.

RDFa¶

RDFa (RDF in attributes) is a standard for storing structured data (RDF triples) in HTML (XHTML, HTML5) attributes.

Schema.org structured data can be included in an HTML page as RDFa.

RDFa 1.1 Core Context¶

The RDFa 1.1 Core Context defines a number of commonly used vocabulary namespaces and URIs (prefix mappings).

An example RDFa HTML5 fragment with vocabularies drawn from the RDFa 1.1 Core Context:

<div prefix="schema: http://schema.org/">
  <div typeof="schema:Thing">
    <span property="schema:name">RDFa 1.1 JSON-LD Core Context</span>
    <a property="schema:url" href="https://www.w3.org/2013/json-ld-context/rdfa11">https://www.w3.org/2013/json-ld-context/rdfa11</a>
  </div>
</div>

An example JSON-LD document with the RDFa 1.1 Core Context:

{"@context": "https://www.w3.org/2013/json-ld-context/rdfa11",
 "@graph": [
   {"@type": "schema:Thing",
    "schema:name": "RDFa 1.1 JSON-LD Core Context",
    "schema:url": "https://www.w3.org/2013/json-ld-context/rdfa11"}
 ]}

Note

Schema.org is included in the RDFa 1.1 Core Context.

Schema.org does, in many places, reimplement other vocabularies, e.g. for consistency with Schema.org/DataTypes such as schema.org/Number.

There is also Schema.org RDF, which, for example, maps schema:name to rdfs:label; and OWL.

JSON-LD¶

JSON-LD (JSON Linked Data) is a standard for expressing RDF Linked Data as JSON.

JSON-LD specifies a @context for regular JSON documents which maps JSON attributes to URIs with datatypes and, optionally, languages.

RDFS¶

@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .

RDFS (RDF Schema) is an RDF standard for classes and properties.

A few notable RDFS classes:

rdfs:Resource (everything in RDF)

rdfs:Literal (strings, integers)

rdfs:Class

A few notable / frequently used properties:

rdfs:label

rdfs:comment

rdfs:seeAlso

rdfs:domain

rdfs:range

rdfs:subPropertyOf

OWL builds upon many RDFS concepts.

DCMI¶

@prefix dcterms: <http://purl.org/dc/terms/> .
@prefix dctypes: <http://purl.org/dc/dcmitype/> .

DCTYPES (Dublin Core Types) and DCTERMS (Dublin Core Terms) are standards for common types, classes, and properties that have been mapped to XML and RDF.

EARL¶

@prefix earl: <http://www.w3.org/ns/earl#> .

W3C EARL (Evaluation and Report Language) is an RDFS vocabulary for automated, semi-automated, and manual test results.

The JSON-LD Implementation test results are expressed with EARL:

RDF Data Cubes¶

@prefix qb: <http://purl.org/linked-data/cube#> .

RDF Data Cubes vocabulary is an RDF standard vocabulary for expressing linked multi-dimensional statistical data and aggregations.

Data Cubes have dimensions, attributes, and measures.

Pivot tables and cross-tabulations can be expressed with the RDF Data Cubes vocabulary.

SKOS¶

@prefix skos: <http://www.w3.org/2004/02/skos/core#> .

SKOS (Simple Knowledge Organization System) is an RDF standard vocabulary for linking concepts and vocabulary terms.

XKOS¶

@prefix xkos: <http://rdf-vocabulary.ddialliance.org/xkos#> .

XKOS (Extended Knowledge Organization System) is an RDF standard which extends SKOS for linking concepts and statistical measures.

FOAF¶

@prefix foaf: <http://xmlns.com/foaf/0.1/> .

FOAF (Friend of a Friend) is an RDF standard vocabulary for expressing social networks and contact information.

SHACL¶

@prefix sh: <http://www.w3.org/ns/shacl#> .

W3C SHACL (Shapes Constraint Language) is a language for describing RDF and RDFS graph shape constraints.

SHACL relaxes specific RDFS restrictions: https://www.w3.org/TR/shacl/#shacl-rdfs

Required RDFS / OWL Entailment can be specified in SHACL with the sh:entailment property and e.g. SPARQL 1.1 entailment IRIs: https://www.w3.org/TR/shacl/#entailment

SIOC¶

@prefix sioc: <http://rdfs.org/sioc/ns#> .

SIOC (Semantically Interlinked Online Communities) is an RDF standard for online social networks and resources like blog, forum, and mailing list posts.

OA¶

@prefix oa: <http://www.w3.org/ns/oa#> .

OA (Open Annotation) is an RDF standard for commenting on anything with a URI.

Features:

Web Annotation: https://en.wikipedia.org/wiki/Web_annotation

Comment on any resource with a (stable) URI

Comment on text fragments

Comment on SVG items

Implementations:

Schema.org¶

Schema.org is a vocabulary for expressing structured data on the web.

Schema.org can be expressed as microdata, RDF, RDFa, and JSON-LD.


“Schema.org: Evolution of Structured Data on the Web” (2015) https://queue.acm.org/detail.cfm?id=2857276

“Evolving Schema.org in Practice Pt1: The Bits and Pieces” (2016) https://dataliberate.com/2016/02/evolving-schema-org-in-practice-pt1-the-bits-and-pieces/

Note

The https://schema.org/ site is served over HTTPS, but the schema.org terms are HTTP URIs

Schema.org RDF¶

@prefix schema: <http://schema.org/> .

SPARQL¶

SPARQL is a text-based query and update language for RDF triples (and quads).

Challenges:

SPARQL query requests and responses are over HTTP; however, it’s best – and often required – to build SPARQL queries with a server application, on behalf of clients.

SPARQL default LIMIT clauses and paging windows could allow for more efficient caching.

See: LDP for more of a resource-based RESTful API that can be implemented on top of the graph pattern queries supported by SPARQL.

LDP¶

@prefix ldp: <http://www.w3.org/ns/ldp#> .

LDP (Linked Data Platform) is a standard for building HTTP REST APIs for RDF Linked Data.

Features:

HTTP REST API for Linked Data Platform Containers (LDPC) containing Linked Data Platform Resources (LDPR)

Server-side Paging

OWL¶

@prefix owl: <http://www.w3.org/2002/07/owl#> .

OWL (Web Ontology Language) layers semantics, reasoning, inference, and entailment capabilities onto RDF (and general logical set theory).

https://www.w3.org/TR/owl2-quick-reference/#Names.2C_Prefixes.2C_and_Notation

https://www.w3.org/TR/owl2-quick-reference/#OWL_2_constructs_and_axioms

A few notable OWL classes:

owl:Class a owl:Class ; rdfs:subClassOf rdfs:Class (RDFS)

owl:Thing a owl:Class (universal class)

owl:Nothing a owl:Class (empty class)

owl:Restriction a rdfs:Class ; rdfs:subClassOf owl:Class

A few OWL Property types:

owl:DatatypeProperty

owl:ObjectProperty

owl:ReflexiveProperty

owl:IrreflexiveProperty

owl:SymmetricProperty

owl:TransitiveProperty

owl:FunctionalProperty

owl:InverseFunctionalProperty

owl:OntologyProperty

owl:AnnotationProperty

owl:AsymmetricProperty

https://en.wikipedia.org/wiki/Cardinality

owl:minCardinality

owl:cardinality

owl:maxCardinality


owl:intersectionOf

owl:unionOf

owl:complementOf

owl:oneOf


owl:allValuesFrom

owl:someValuesFrom


PROV¶

@prefix prov: <http://www.w3.org/ns/prov#> .

PROV (Provenance) ontology is an OWL RDF standard for expressing data provenance (who, what, when, and how, to a certain extent).

DBpedia¶

@prefix dbpedia-owl: <http://dbpedia.org/ontology/> .

DBpedia is an OWL RDF vocabulary for expressing structured data from Wikipedia sidebar infoboxes.

DBpedia is currently the most central (most linked to and from) RDF vocabulary. (see: LODCloud)

Example:

DBpedia is generated by batch extraction on a regular basis.

QUDT¶

@prefix qudt: <http://qudt.org/schema/qudt#> .
@prefix qudt-1.1: <http://qudt.org/1.1/schema/qudt#> .
@prefix qudt-2.1: <http://qudt.org/2.1/schema/qudt#> .

QUDT (Quantities, Units, Dimensions, and Types) is an RDF standard vocabulary for representing physical units.

QUDT is composed of a number of sub-vocabularies.

QUDT maintains conversion factors for Metric and Imperial Units.

Examples:

qudt:SpaceAndTimeUnit

qudt:SpaceAndTimeUnit
    rdf:type owl:Class ;
    rdfs:label "Space And Time Unit"^^xsd:string ;
    rdfs:subClassOf qudt:PhysicalUnit ;
    rdfs:subClassOf [
        rdf:type owl:Restriction ;
        owl:hasValue "UST"^^xsd:string ;
        owl:onProperty qudt:typePrefix
    ] .

QUDT Namespaces:

@prefix qudt: <http://qudt.org/2.1/schema/qudt#> .
@prefix qudt-dimension: <http://qudt.org/2.1/vocab/dimensionvector#> .
@prefix qudt-quantity: <http://qudt.org/2.1/vocab/quantitykind#> .
@prefix qudt-unit: <http://qudt.org/2.1/vocab/unit#> .
@prefix unit: <http://qudt.org/2.1/vocab/unit#> .

This diagram explains how each of the vocabularies are linked and derived: https://github.com/qudt/qudt-public-repo#overview

QUDT Quantities¶

Schema

@prefix quantity: <http://data.nasa.gov/qudt/owl/quantity#> .

Vocabulary

@prefix qudt-quantity: <http://qudt.org/1.1/vocab/quantity#> .

QUDT Quantities is an RDF schema and vocabulary for describing physical quantities.

Examples from http://qudt.org/1.1/vocab/OVG_quantities-qudt-(v1.1).ttl :

qudt-quantity:Time

qudt-quantity:Time
    rdf:type qudt:SpaceAndTimeQuantityKind ;
    rdfs:label "Time"^^xsd:string ;
    qudt:description "Time is a basic component of the measuring system used to sequence events, to compare the durations of events and the intervals between them, and to quantify the motions of objects."^^xsd:string ;
    qudt:symbol "T"^^xsd:string ;
    skos:exactMatch <http://dbpedia.org/resource/Time> .
# ...
unit:SecondTime qudt:quantityKind qudt-quantity:Time .

qudt-quantity:AreaTimeTemperature

qudt-quantity:AreaTimeTemperature
    rdf:type qudt:ThermodynamicsQuantityKind ;
    rdfs:label "Area Time Temperature"^^xsd:string .
# ...
unit:SquareFootSecondDegreeFahrenheit qudt:quantityKind qudt-quantity:AreaTimeTemperature .

QUDT Units¶

    @prefix unit: <http://qudt.org/1.1/vocab/unit#> .
    @prefix qudt-unit-1.1: <http://qudt.org/1.1/vocab/unit#> .

The QUDT Units Ontology is an RDF vocabulary defining many units of measure.

Examples:

unit:SecondTime

    unit:SecondTime
        rdf:type qudt:SIBaseUnit , qudt:TimeUnit ;
        rdfs:label "Second"^^xsd:string ;
        qudt:abbreviation "s"^^xsd:string ;
        qudt:code "1615"^^xsd:string ;
        qudt:conversionMultiplier "1"^^xsd:double ;
        qudt:conversionOffset "0.0"^^xsd:double ;
        qudt:symbol "s"^^xsd:string ;
        skos:exactMatch <http://dbpedia.org/resource/Second> .
    # ...

http://www.qudt.org/qudt/owl/1.0.0/unit/Instances.html#SecondTime
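`qudt:conversionMultiplier` and `qudt:conversionOffset` relate a unit to the base unit of its dimension. The following is a minimal sketch of converting between two units through that shared base unit. It **assumes** the convention `base = (value + offset) * multiplier`. The factor table is illustrative: it uses exact physical definitions (1 ft = 0.3048 m, 1 mi = 1609.344 m, K = (°F + 459.67) × 5/9) and was not copied from a QUDT release. `FACTORS`, `to_base`, `from_base` and `convert` are hypothetical names.

```python
# Illustrative (conversionMultiplier, conversionOffset) pairs, NOT copied from QUDT.
# Assumed convention: value_in_base_unit = (value + offset) * multiplier.
# Base units here: meter for length, kelvin for temperature.
FACTORS = {
    "Meter": (1.0, 0.0),
    "Foot": (0.3048, 0.0),
    "Mile": (1609.344, 0.0),
    "Kelvin": (1.0, 0.0),
    "DegreeCelsius": (1.0, 273.15),
    "DegreeFahrenheit": (5.0 / 9.0, 459.67),
}


def to_base(value, unit):
    """Convert a value in `unit` to the base unit of its dimension."""
    multiplier, offset = FACTORS[unit]
    return (value + offset) * multiplier


def from_base(value, unit):
    """Invert to_base: convert a base-unit value into `unit`."""
    multiplier, offset = FACTORS[unit]
    return value / multiplier - offset


def convert(value, from_unit, to_unit):
    """Convert between two units; does NOT check that they share a dimension."""
    return from_base(to_base(value, from_unit), to_unit)


print(convert(1, "Mile", "Foot"))                   # roughly 5280
print(convert(212, "DegreeFahrenheit", "DegreeCelsius"))  # roughly 100
```

The offset matters only for interval-scale units such as temperatures. For purely multiplicative units like `unit:SecondTime` (multiplier 1, offset 0.0), the conversion reduces to a scale factor.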

unit:HorsepowerElectric (from http://qudt.org/1.1/vocab/OVG_units-qudt-(v1.1).ttl )

    unit:HorsepowerElectric
        rdf:type qudt:NotUsedWithSIUnit , qudt:PowerUnit ;
        rdfs:label "Horsepower Electric"^^xsd:string ;
        qudt:abbreviation "hp/V"^^xsd:string ;
        qudt:code "0815"^^xsd:string ;
        qudt:symbol "hp/V"^^xsd:string .

unit:SystemOfUnits_SI (from http://qudt.org/1.1/vocab/OVG_units-qudt-(v1.1).ttl )

    unit:SystemOfUnits_SI
        rdf:type qudt:SystemOfUnits ;
        rdfs:label "International System of Units"^^xsd:string ;
        qudt:abbreviation "SI"^^xsd:string ;
        qudt:systemAllowedUnit
            unit:ArcMinute , unit:Day , unit:MinuteTime , unit:DegreeAngle , unit:ArcSecond ,
            unit:ElectronVolt , unit:RevolutionPerHour , unit:Femtometer , unit:DegreePerSecond ,
            unit:DegreeCelsius , unit:Liter , unit:MicroFarad , unit:AmperePerDegree ,
            unit:RevolutionPerMinute , unit:MicroHenry , unit:Kilometer , unit:Revolution ,
            unit:Hour , unit:PicoFarad , unit:Gram , unit:DegreePerSecondSquared , unit:MetricTon ,
            unit:CubicCentimeter , unit:SquareCentimeter , unit:CubicMeterPerHour , unit:KiloPascal ,
            unit:DegreePerHour , unit:UnifiedAtomicMassUnit , unit:MilliHenry , unit:KilogramPerHour ,
            unit:KiloPascalAbsolute , unit:NanoFarad , unit:RadianPerMinute , unit:RevolutionPerSecond ;
        qudt:systemBaseUnit
            unit:Kilogram , unit:Unitless , unit:Kelvin , unit:Meter , unit:SecondTime ,
            unit:Mole , unit:Candela , unit:Ampere ;
        qudt:systemCoherentDerivedUnit
            unit:PerCubicMeter , unit:WattPerSquareMeter , unit:Volt , unit:WattPerMeterKelvin ,
            unit:CoulombPerCubicMeter , unit:Becquerel , unit:WattPerSquareMeterSteradian ,
            unit:KelvinPerSecond , unit:Gray , unit:RadianPerSecond , unit:VoltPerMeter ,
            unit:HenryPerMeter , unit:WattPerSteradian , unit:JouleMeterPerMole , unit:CoulombMeter ,
            unit:PerTeslaMeter , unit:Pascal , unit:LumenPerWatt , unit:KilogramMeterPerSecond ,
            unit:SquareMeterKelvin , unit:MoleKelvin , unit:MeterKelvinPerWatt , unit:Steradian ,
            unit:AmperePerMeter , unit:SquareMeterKelvinPerWatt , unit:JouleSecond , unit:MeterPerFarad ,
            unit:KilogramPerSecond , unit:HertzPerTesla , unit:KilogramMeterSquared ,
            unit:WattPerSquareMeterQuarticKelvin , unit:PerMeterKelvin , unit:JoulePerCubicMeterKelvin ,
            unit:JoulePerSquareTesla , unit:JoulePerCubicMeter , unit:MeterPerKelvin ,
            unit:AmperePerSquareMeter , unit:CubicCoulombMeterPerSquareJoule , unit:CoulombPerMeter ,
            unit:Katal , unit:CubicMeter , unit:LumenSecond , unit:Coulomb , unit:MolePerKilogram ,
            unit:CubicMeterPerKilogramSecondSquared , unit:PerMeter , unit:AmperePerRadian ,
            unit:CoulombPerKilogram , unit:QuarticCoulombMeterPerCubicEnergy , unit:Tesla ,
            unit:JoulePerKilogram , unit:MeterKelvin , unit:MeterPerSecond , unit:NewtonMeter ,
            unit:CandelaPerSquareMeter , unit:Siemens , unit:CoulombSquareMeter ,
            unit:KilogramPerCubicMeter , unit:KilogramSecondSquared , unit:Watt , unit:AmperePerJoule ,
            unit:VoltPerSecond , unit:JoulePerKilogramKelvinPerCubicMeter , unit:PascalPerSecond ,
            unit:CubicMeterPerMole , unit:KilogramPerMeter , unit:PascalSecond , unit:Joule ,
            unit:HertzPerVolt , unit:KilogramPerSquareMeter , unit:PerTeslaSecond ,
            unit:MolePerCubicMeter , unit:PerSecond , unit:JoulePerKelvin , unit:RadianPerSecondSquared ,
            unit:Newton , unit:CubicMeterPerKelvin , unit:GrayPerSecond , unit:SquareMeterPerSecond ,
            unit:CubicMeterPerKilogram , unit:KilogramPerMole , unit:SquareMeterPerKelvin ,
            unit:SquareMeterSteradian , unit:TeslaSecond , unit:Ohm , unit:KelvinPerWatt ,
            unit:JoulePerKilogramKelvinPerPascal , unit:WattSquareMeter , unit:MeterKilogram ,
            unit:WattSquareMeterPerSteradian , unit:Hertz , unit:VoltPerSquareMeter ,
            unit:CubicMeterPerSecond , unit:JoulePerMoleKelvin , unit:TeslaMeter , unit:JoulePerMole ,
            unit:Lux , unit:FaradPerMeter , unit:PerMole , unit:JouleSecondPerMole ,
            unit:AmpereTurnPerMeter , unit:VoltMeter , unit:SecondTimeSquared , unit:AmpereTurn ,
            unit:JoulePerKilogramKelvin , unit:CoulombPerSquareMeter , unit:NewtonPerKilogram ,
            unit:JoulePerSquareMeter , unit:Weber , unit:Henry , unit:MeterPerSecondSquared ,
            unit:KilogramKelvin , unit:Sievert , unit:NewtonPerMeter , unit:WattPerSquareMeterKelvin ,
            unit:SquareCoulombMeterPerJoule , unit:Lumen , unit:Farad , unit:HertzPerKelvin ,
            unit:SquareMeter , unit:JoulePerTesla , unit:Radian , unit:KelvinPerTesla ,
            unit:NewtonPerCoulomb , unit:CoulombPerMole ;
        qudt:systemPrefixUnit
            unit:Hecto , unit:Nano , unit:Tera , unit:Atto , unit:Kilo , unit:Yocto , unit:Yotta ,
            unit:Deci , unit:Zepto , unit:Pico , unit:Femto , unit:Milli , unit:Micro , unit:Zetta ,
            unit:Mega , unit:Centi , unit:Giga , unit:Peta , unit:Deca , unit:Exa ;
        skos:exactMatch <http://dbpedia.org/resource/International_System_of_Units> .
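`unit:SystemOfUnits_SI` sorts units into base, coherent derived, allowed, and prefix units. The following is a minimal sketch of using those memberships to classify a unit. The sets are a small, hand-copied subset of the lists above, not the full vocabulary. `si_role` is a hypothetical helper.

```python
# Small hand-copied subsets of the unit:SystemOfUnits_SI lists above (not complete).
SI_BASE = {
    "unit:Meter", "unit:Kilogram", "unit:SecondTime", "unit:Ampere",
    "unit:Kelvin", "unit:Mole", "unit:Candela", "unit:Unitless",
}
SI_COHERENT_DERIVED = {"unit:Newton", "unit:Joule", "unit:Watt", "unit:Pascal"}
SI_ALLOWED = {"unit:Liter", "unit:Hour", "unit:DegreeCelsius", "unit:Kilometer"}


def si_role(unit):
    """Classify a unit by which qudt:system*Unit list it appears in."""
    if unit in SI_BASE:
        return "base"
    if unit in SI_COHERENT_DERIVED:
        return "coherent derived"
    if unit in SI_ALLOWED:
        return "allowed"
    return "not listed"


for u in ("unit:Kelvin", "unit:Watt", "unit:Hour", "unit:HorsepowerElectric"):
    print(u, si_role(u))
```

`unit:HorsepowerElectric` falls through to "not listed", which is consistent with its `qudt:NotUsedWithSIUnit` type in the earlier example.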

Wikidata¶

Wikidata is an open, collaboratively edited knowledge base.

Semantic Web Tools¶

Semantic Web Tools are designed to work with RDF formats.

See also: RDF Triplestores

CKAN¶

CKAN (Comprehensive Knowledge Archive Network) is an Open Source data repository web application and API written in Python with support for RDF.
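CKAN's HTTP API (the "Action API", version 3) exposes actions such as `package_search` under `/api/3/action/`. The action returns a JSON envelope with `success` and `result` keys. The following is a minimal sketch that only builds a search URL and parses a response body; it makes no network request. The helper names and the sample response are hypothetical and for illustration only.

```python
import json
from urllib.parse import urlencode


def package_search_url(base_url, query, rows=10):
    """Build a CKAN Action API (v3) package_search URL for a CKAN site."""
    params = urlencode({"q": query, "rows": rows})
    return base_url.rstrip("/") + "/api/3/action/package_search?" + params


def dataset_names(response_text):
    """Extract dataset names from a package_search JSON response body."""
    body = json.loads(response_text)
    if not body.get("success"):
        raise RuntimeError("CKAN API call failed")
    return [pkg["name"] for pkg in body["result"]["results"]]


url = package_search_url("https://catalog.data.gov", "rdf", rows=5)
print(url)

# Hypothetical response body; a real one could be fetched with
# urllib.request.urlopen(url).read() when network access is available.
SAMPLE_RESPONSE = (
    '{"success": true, "result": {"count": 1, '
    '"results": [{"name": "example-dataset"}]}}'
)
print(dataset_names(SAMPLE_RESPONSE))
```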

https://datahub.io is powered by CKAN. LODCloud draws from datahub.io datasets.

Many national data portals are powered by CKAN (e.g. https://catalog.data.gov/).

Many public and private data repositories are powered by CKAN.

CKAN is not (yet) built on an RDF triplestore.

There are Dockerfiles for CKAN.

Protégé¶

Protégé is a knowledge management software application with support for RDF, OWL, and a few different reasoners.

Web Protégé is a web-based version of Protégé with many similar features.

Protégé is a Free and Open Source software tool.

RDFJS¶

RDFJS (RDF JavaScript) is a name for tools for working with RDF in the JavaScript programming language.


RDFHDT¶

RDFHDT (RDF Header, Dictionary, Triples) is a binary format for storing and querying very large numbers of triples in highly compressed form.

HDT-IT is a software application for working with RDFHDT datasets.
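Much of HDT's compression comes from its Dictionary and Triples parts. Each distinct RDF term is stored once and mapped to an integer ID, and the triples are stored as sorted ID tuples. The following is a toy Python sketch of that dictionary-encoding idea only. It is not the HDT binary format, which uses separate dictionary sections and compressed bitmap-based triple structures. `dictionary_encode` and `decode` are hypothetical names, and the triples are taken from the QUDT examples above.

```python
def dictionary_encode(triples):
    """Toy sketch of HDT's Dictionary + Triples idea: one integer ID per distinct
    term (shared across subject/predicate/object positions), triples as sorted ID tuples."""
    term_ids = {}

    def term_id(term):
        if term not in term_ids:
            term_ids[term] = len(term_ids) + 1  # IDs start at 1
        return term_ids[term]

    encoded = sorted((term_id(s), term_id(p), term_id(o)) for s, p, o in triples)
    return term_ids, encoded


def decode(term_ids, encoded):
    """Map ID tuples back to terms using the inverted dictionary."""
    terms = {i: t for t, i in term_ids.items()}
    return [(terms[s], terms[p], terms[o]) for s, p, o in encoded]


TRIPLES = [
    ("unit:SecondTime", "rdf:type", "qudt:SIBaseUnit"),
    ("unit:SecondTime", "rdf:type", "qudt:TimeUnit"),
    ("unit:SystemOfUnits_SI", "qudt:systemBaseUnit", "unit:SecondTime"),
]
ids, encoded = dictionary_encode(TRIPLES)
print(ids)
print(encoded)
```

Repeated terms such as `unit:SecondTime` and `rdf:type` are stored once in the dictionary. With very many triples, the integer tuples compress far better than repeated IRI strings.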

RDFLib¶

RDFLib is a library (and a collection of companion libraries) for working with RDF in the Python programming language.

Semantic Web Schema Resources¶

prefix.cc¶

Look up RDF vocabulary prefixes, classes, and properties.

LOV¶

LOV (“Linked Open Vocabularies”) is a web application for cataloging and viewing the metadata of, and the links between, vocabularies (RDF, RDFS, OWL).

LOV can display all of the vocabularies it stores as a bubble chart.

LOV has a “suggest a vocabulary” feature.

Many of the vocabularies stored in LOV can also be searched or looked up from prefix.cc.

URIs for Units¶

LODCloud¶

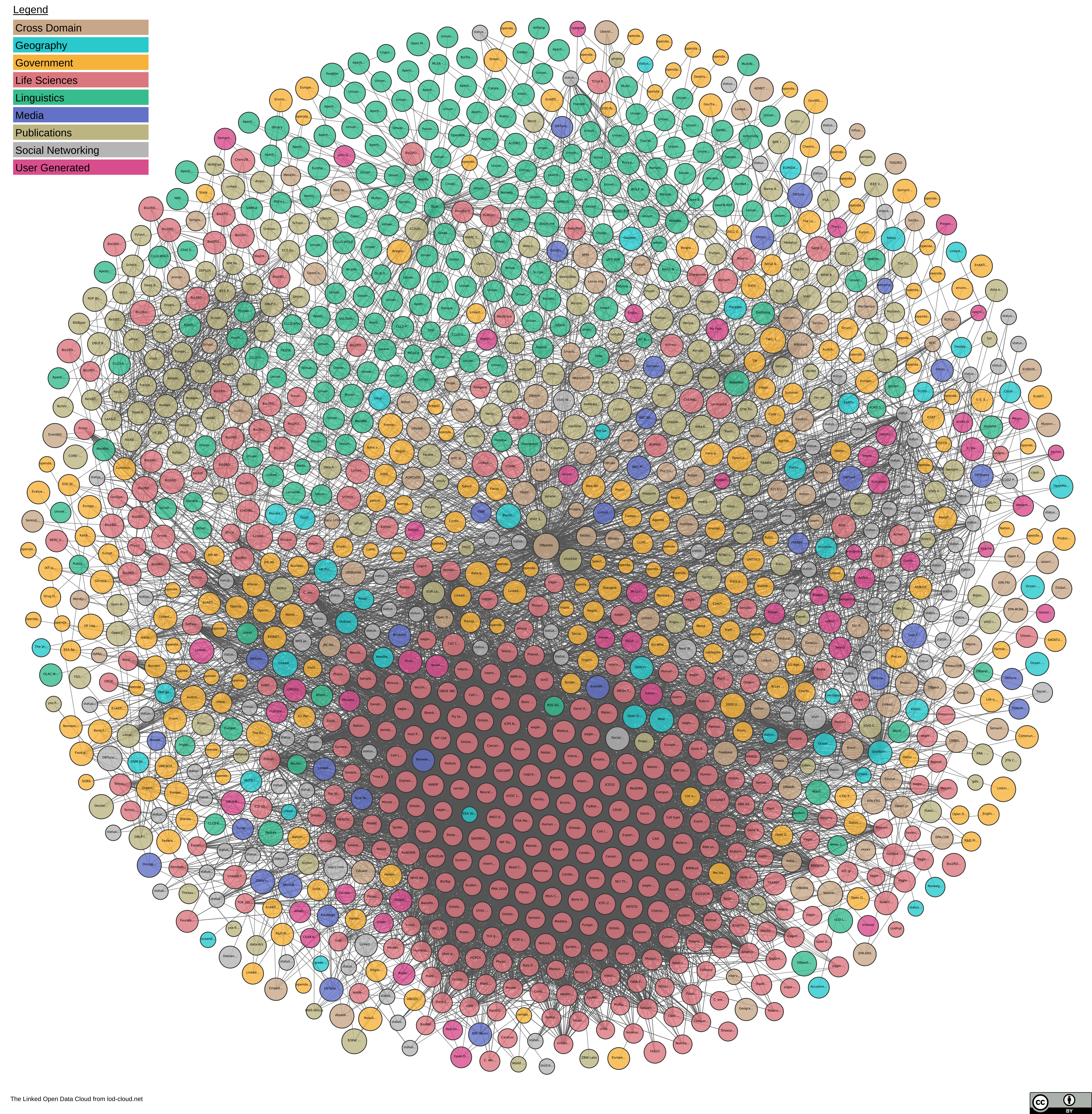

The LOD (“Linking Open Data”) cloud diagram visualizes the datasets (nodes) and links (edges) of the Linked Open Data Cloud.

You can add datasets to the LODCloud: https://lod-cloud.net/add-dataset